Revolutionizing AI Deployment: DeepSeek-R1 on Amazon SageMaker

As organizations increasingly use artificial intelligence to transform their industries, deploying advanced machine learning models efficiently becomes critical. The release of the DeepSeek-R1 distilled models, which can be deployed on Amazon SageMaker, gives leaders a practical path to integrating reasoning-capable AI into their operations. Combining DeepSeek-R1's capabilities with SageMaker's managed, scalable infrastructure can improve both the performance and the cost-efficiency of generative AI applications.

Unraveling DeepSeek-R1: A Game Changer

DeepSeek-R1 is a large language model (LLM) trained with large-scale reinforcement learning in addition to conventional training stages. This training teaches the model to work through complex queries step by step before answering, a behavior known as chain-of-thought (CoT) reasoning, which improves accuracy on tasks such as math, coding, and multi-step analysis. For organizations, this means a model that can handle nuanced business problems that require reasoning rather than simple lookup.
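DeepSeek-R1 typically writes its chain of thought between `<think>` and `</think>` tags and then gives its final answer. The following is a minimal sketch, assuming that output format, of separating the two so an application can log the reasoning while showing users only the answer. `split_reasoning` is a hypothetical helper name. Because some chat templates place the opening `<think>` tag in the prompt rather than the completion, the sketch splits on the closing tag only.

```python
from typing import Tuple


def split_reasoning(text: str) -> Tuple[str, str]:
    """Split a raw DeepSeek-R1 completion into (reasoning, answer).

    Assumes reasoning ends with a closing </think> tag; the opening <think>
    tag may or may not be present in the completion itself.
    If no closing tag is found, the whole text is treated as the answer.
    """
    closing = "</think>"
    if closing not in text:
        return "", text.strip()
    # Split on the first closing tag only; everything after it is the answer.
    head, _, tail = text.partition(closing)
    # Drop the opening tag if the model emitted it.
    reasoning = head.replace("<think>", "", 1).strip()
    return reasoning, tail.strip()


if __name__ == "__main__":
    raw = "<think>2 + 2 equals 4.</think>\nThe answer is 4."
    print(split_reasoning(raw))
```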

Efficiency and Flexibility with Distilled Models

The DeepSeek-R1 distilled models make this reasoning capability practical at much smaller scales. They are created by fine-tuning smaller open models from the Qwen and Llama families on reasoning samples generated by the full R1 model, yielding variants that range from 1.5 billion to 70 billion parameters. Smaller variants give up some accuracy in exchange for far lower compute and memory requirements, so businesses can match model size to each application's quality, latency, and budget needs. Deploying these models on Amazon SageMaker improves operational efficiency and puts advanced AI capabilities within reach of teams that lack specialized serving infrastructure.
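A useful first sizing step is to estimate how much accelerator memory each variant's weights need. The sketch below uses the common rule of thumb of 2 bytes per parameter for bf16/fp16 weights, so a 70B model needs roughly 140 GB for weights alone, before the KV cache and runtime overhead. The Hugging Face Hub IDs are the published distilled checkpoints; the helper names and the 70% headroom factor are illustrative assumptions, not a deployment rule.

```python
from typing import Dict, Optional

# Published DeepSeek-R1 distilled checkpoints on the Hugging Face Hub,
# keyed by approximate parameter count in billions.
DISTILLED_MODELS: Dict[float, str] = {
    1.5: "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B",
    7: "deepseek-ai/DeepSeek-R1-Distill-Qwen-7B",
    8: "deepseek-ai/DeepSeek-R1-Distill-Llama-8B",
    14: "deepseek-ai/DeepSeek-R1-Distill-Qwen-14B",
    32: "deepseek-ai/DeepSeek-R1-Distill-Qwen-32B",
    70: "deepseek-ai/DeepSeek-R1-Distill-Llama-70B",
}


def weight_memory_gb(params_billions: float, bytes_per_param: int = 2) -> float:
    """Approximate weight memory in GB (1 GB = 1e9 bytes).

    bf16/fp16 weights take 2 bytes per parameter. KV cache, activations,
    and runtime overhead are excluded, so real usage is higher.
    """
    return params_billions * bytes_per_param


def largest_model_that_fits(gpu_memory_gb: float, headroom: float = 0.7) -> Optional[str]:
    """Return the biggest distilled model whose weights use at most
    `headroom` of the available GPU memory (hypothetical 0.7 default
    leaves room for the KV cache), or None if none fit."""
    budget = gpu_memory_gb * headroom
    fitting = [size for size in DISTILLED_MODELS if weight_memory_gb(size) <= budget]
    return DISTILLED_MODELS[max(fitting)] if fitting else None


if __name__ == "__main__":
    print(weight_memory_gb(70))          # weights only, in GB
    print(largest_model_that_fits(24))   # e.g. a single 24 GB GPU
```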

Integrating DeepSeek-R1 into Workflows: Step-by-Step Deployment

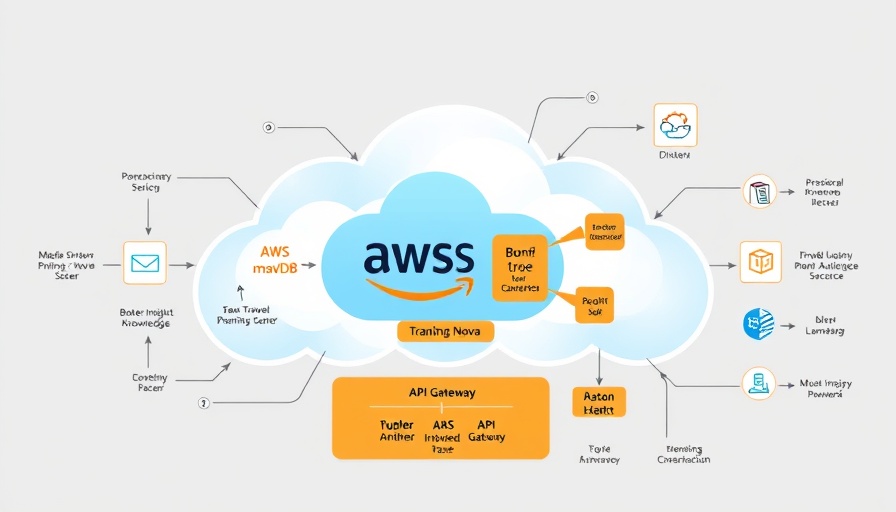

Organizations can deploy DeepSeek-R1 models on SageMaker through several routes, all running on AWS-managed infrastructure:

- Using the Large Model Inference (LMI) container: this AWS-provided container serves models through high-performance open-source inference engines such as vLLM, making it well suited to large models.

- Deploying from the Hugging Face Hub: models can also be deployed directly by their Hugging Face Hub ID, letting teams use popular pre-trained models and integrate them quickly.

- Meeting security requirements: SageMaker security features, including deployment into a virtual private cloud (VPC) and fine-grained AWS Identity and Access Management (IAM) roles, help keep sensitive organizational data protected during and after deployment.
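The LMI route is configured largely through container environment variables, and VPC placement through a small settings structure. The sketch below builds both as plain dictionaries. The helper names are hypothetical, and the LMI variable names (`HF_MODEL_ID`, `OPTION_ROLLING_BATCH`, `OPTION_TENSOR_PARALLEL_DEGREE`) and their values are assumptions to confirm against the documentation for the LMI container version you deploy. The `Subnets` and `SecurityGroupIds` keys follow the SageMaker VPC configuration shape.

```python
from typing import Dict, List


def build_lmi_env(model_id: str, rolling_batch: str = "vllm",
                  tensor_parallel: str = "max") -> Dict[str, str]:
    """Environment variables for a hypothetical LMI endpoint.

    Names follow the LMI configuration convention; verify them against the
    docs for your container version before deploying.
    """
    return {
        "HF_MODEL_ID": model_id,                           # model pulled from the Hugging Face Hub
        "OPTION_ROLLING_BATCH": rolling_batch,             # engine used for continuous batching
        "OPTION_TENSOR_PARALLEL_DEGREE": tensor_parallel,  # "max": shard across all GPUs on the instance
    }


def build_vpc_config(subnet_ids: List[str], security_group_ids: List[str]) -> Dict[str, List[str]]:
    """VPC settings in the Subnets / SecurityGroupIds shape SageMaker uses."""
    if not subnet_ids or not security_group_ids:
        raise ValueError("at least one subnet and one security group are required")
    return {"Subnets": list(subnet_ids), "SecurityGroupIds": list(security_group_ids)}


if __name__ == "__main__":
    env = build_lmi_env("deepseek-ai/DeepSeek-R1-Distill-Llama-8B")
    vpc = build_vpc_config(["subnet-placeholder"], ["sg-placeholder"])
    print(env)
    print(vpc)
```

In a real deployment these dictionaries would typically be passed to `sagemaker.model.Model(image_uri=..., role=..., env=env, vpc_config=vpc)`, followed by `model.deploy(initial_instance_count=1, instance_type=...)`. The image URI, IAM role, subnet and security group IDs, and instance type are placeholders you must supply.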

Cost-Effectiveness and Performance Optimization

SageMaker real-time endpoints are billed for the instance time they run, so cost depends mainly on the instance type chosen and how long it stays up. Smaller distilled variants can run on smaller, cheaper GPU instances, while larger variants need more GPU memory. Choosing the smallest instance that meets your latency and throughput targets, and using automatic scaling to add capacity under load and remove it when traffic drops, avoids paying for idle resources. This matters most in large-scale inference scenarios.
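The cost trade-off above can be made concrete with simple arithmetic: an instance billed per hour that sustains a given token throughput has an effective cost per million tokens, and keeping it up fewer hours lowers the monthly bill. The sketch below assumes full utilization and uses a hypothetical price of $3.60 per hour and 1,000 tokens per second; these are placeholders, not actual SageMaker prices or measured DeepSeek-R1 throughput.

```python
def cost_per_million_tokens(hourly_price_usd: float, tokens_per_second: float) -> float:
    """Effective cost to generate one million tokens on a fully busy instance."""
    tokens_per_hour = tokens_per_second * 3600
    return hourly_price_usd / tokens_per_hour * 1_000_000


def monthly_cost(hourly_price_usd: float, instance_count: int,
                 hours_per_day: float, days: int = 30) -> float:
    """Instance cost for a month at a fixed number of running hours per day."""
    return hourly_price_usd * instance_count * hours_per_day * days


if __name__ == "__main__":
    price = 3.60  # hypothetical hourly price, for illustration only
    print(round(cost_per_million_tokens(price, 1000), 2))  # 3.6M tokens/hour
    print(round(monthly_cost(price, 1, 24), 2))            # always on
    print(round(monthly_cost(price, 1, 12), 2))            # scaled down half the day
```

Real utilization is rarely 100%, so idle time raises the effective per-token cost. Scaling down during quiet periods is what closes that gap.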

Best Practices for Deploying DeepSeek-R1

To maximize success and mitigate risk, organizations should follow several best practices when deploying DeepSeek-R1 models on SageMaker:

- Apply guardrails, such as Amazon Bedrock Guardrails through its ApplyGuardrail API, to validate model inputs and outputs and maintain safety standards.

- Continuously monitor latency, throughput, and output quality, and adjust configurations based on evaluation metrics.

- Deploy endpoints inside private networks, such as a VPC with private subnets, to reduce exposure to external threats.
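Two of these practices, validating outputs and monitoring performance, can be illustrated with small checks. The sketch below is a hypothetical stand-in, not a managed guardrail service: `passes_basic_checks` rejects empty, overly long, or blocked-term responses, and `latency_alert` flags when the nearest-rank 95th-percentile latency exceeds a budget. The function names, blocked terms, length limit, and latency budget are all illustrative assumptions.

```python
from typing import Iterable, Sequence


def passes_basic_checks(answer: str, blocked_terms: Iterable[str], max_chars: int = 4000) -> bool:
    """Deliberately simple output check: non-empty, bounded length, no blocked terms.

    A hypothetical placeholder; production guardrails evaluate content far
    more thoroughly (topics, PII, harmful content, grounding).
    """
    text = answer.strip()
    if not text or len(text) > max_chars:
        return False
    lowered = text.lower()
    return not any(term.lower() in lowered for term in blocked_terms)


def percentile(samples: Sequence[float], p: int) -> float:
    """Nearest-rank percentile (p in 1..100) of latency samples."""
    if not samples or not 1 <= p <= 100:
        raise ValueError("need samples and 1 <= p <= 100")
    ordered = sorted(samples)
    rank = (p * len(ordered) + 99) // 100  # ceil(p * n / 100) using integer arithmetic
    return ordered[rank - 1]


def latency_alert(samples_ms: Sequence[float], p95_budget_ms: float) -> bool:
    """True when observed p95 latency exceeds the budget, signalling a config review."""
    return percentile(samples_ms, 95) > p95_budget_ms


if __name__ == "__main__":
    latencies_ms = [float(x) for x in range(100, 2100, 100)]  # synthetic samples
    print(percentile(latencies_ms, 95), latency_alert(latencies_ms, 1500))
    print(passes_basic_checks("The answer is 4.", ["password"]))
```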

Conclusion: Embrace the Future of AI with DeepSeek-R1

Deploying the DeepSeek-R1 distilled models on Amazon SageMaker gives organizations advanced reasoning capabilities in a secure, efficient, and cost-effective manner. For industry leaders planning their AI strategy, these models may well mark the next significant step in operational effectiveness.
