Empowering Organizations with Contextual Question and Answering

As organizations increasingly adopt artificial intelligence, Large Language Models (LLMs) have transformed the way businesses engage with their data. Amazon Bedrock is a useful tool in this shift: it lets teams fine-tune LLMs for context-based question answering, which can improve the quality and speed of decision-making.

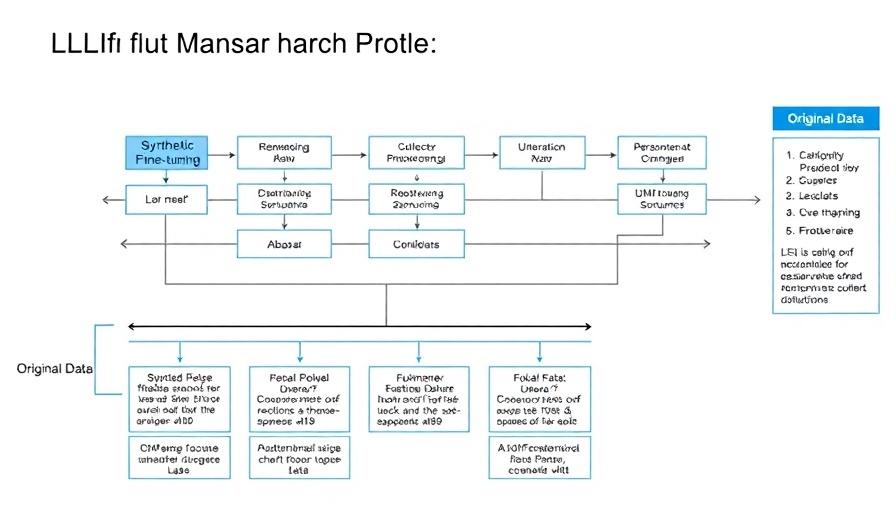

Why Synthetic Data Matters for Training LLMs

Synthetic data is more than a trend; it can materially improve how robust an LLM becomes. Even organizations that collect large volumes of data rarely have real user queries covering every topic they care about. Training on high-quality synthetic question and answer pairs can fill those gaps, so the model learns from a broader range of cases. Done carefully, this can also help reduce biases present in the original training data, supporting a more comprehensive response system tailored to the organization's needs.
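To make the idea concrete, here is a minimal sketch of how synthetic question and answer pairs might be assembled into a JSON Lines training file. It is an illustration under stated assumptions, not a prescribed method: the facts and question templates are hypothetical, and the `prompt` and `completion` field names are an assumed record layout that should be checked against the Amazon Bedrock documentation for the model being fine-tuned.

```python
import json
from itertools import product

# Hypothetical seed facts; in practice these would come from the
# organization's own documents or knowledge base.
FACTS = [
    {"topic": "refund window",
     "answer": "Refunds are accepted within 30 days of purchase."},
    {"topic": "support hours",
     "answer": "Support is available 9am to 5pm on weekdays."},
]

# Hypothetical templates that vary how a user might phrase the same question.
TEMPLATES = [
    "What is the {topic}?",
    "Can you tell me about the {topic}?",
    "I need details on the {topic}.",
]


def build_records(facts, templates):
    """Pair every fact with every template to produce synthetic Q&A records."""
    records = []
    for fact, template in product(facts, templates):
        records.append({
            # Assumed field names; verify against the target model's format.
            "prompt": template.format(topic=fact["topic"]),
            "completion": fact["answer"],
        })
    return records


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(record) for record in records)


records = build_records(FACTS, TEMPLATES)
jsonl_text = to_jsonl(records)
```

Each fact is paired with several phrasings, so two facts and three templates yield six training records. A real pipeline would typically also review the generated pairs for accuracy before using them for fine-tuning.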

The Strategic Advantage: Tailoring LLMs to Specific Contexts

CEOs, CMOs, and COOs should understand the strategic advantages of fine-tuning LLMs through Amazon Bedrock. Adapting these models to a particular industry context allows organizations to deliver more accurate and relevant responses to stakeholders. Because the answers are grounded in the specific nuances of the industry rather than in general knowledge alone, they are more likely to be both precise and useful, which ultimately drives better outcomes.

Driving Organizational Transformation with AI

Implementing AI-driven solutions like Amazon Bedrock can catalyze significant organizational transformation. For executives seeking to lead their companies into a future shaped by technology, understanding how to use AI tools to improve customer engagement and operational efficiency is essential. Fine-tuning LLMs reflects a proactive approach that can set a business apart from competitors who are slower to adopt these innovations.

Future Insights: The Evolving Landscape of AI in Business

As AI technology continues to advance, organizations that embrace these tools will likely see significant changes in how they operate. Because LLMs can be retrained as an organization's data grows, future implementations could become even better at understanding and responding to customer queries. This adaptability could place forward-thinking companies at the forefront of their industries.

Conclusion: Elevating AI Integration Strategies

The insights gleaned from utilizing Amazon Bedrock highlight the critical intersection of technology and strategy within business. Executives who recognize the transformative power of AI in handling context-based Q&A can position their organizations as leaders in innovation and efficiency. By investing in synthetic data training and fine-tuning LLMs, these leaders will pave the way for future success and operational excellence.
