Unleashing the Power of AI with Amazon SageMaker HyperPod

The landscape of artificial intelligence (AI) is constantly evolving, and organizations are looking for tools that offer both flexibility and efficiency in model deployment. Amazon has expanded this space by adding model deployment capabilities to Amazon SageMaker HyperPod, changing how foundation models (FMs) are deployed. By supporting deployment of models from Amazon SageMaker JumpStart as well as custom models stored in Amazon S3 or Amazon FSx for Lustre, HyperPod lets companies run their entire model lifecycle, from training and fine-tuning to deployment, on the same infrastructure.

How SageMaker HyperPod Enhances the Model Development Lifecycle

Amazon SageMaker HyperPod is not merely an updated feature; it is purpose-built infrastructure for large-scale model training. Since its introduction in 2023, HyperPod has helped organizations reduce costs, minimize downtime, and accelerate their path to market. Companies such as Salesforce and Hippocratic AI already use it to advance their AI initiatives. The new deployment capabilities let them use the same resources across the whole model lifecycle, a significant gain in operational efficiency.

Kubernetes Integration: A Seamless Transition

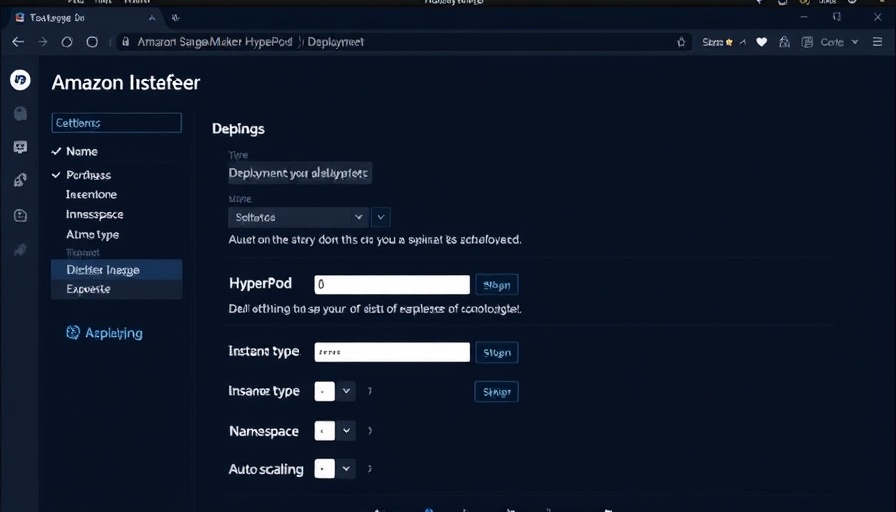

The integration of Amazon Elastic Kubernetes Service (Amazon EKS) with SageMaker HyperPod adds another layer of convenience. Kubernetes is widely favored for its flexibility and open-source ecosystem, but running foundation model inference on it at scale can be challenging. With HyperPod, customers can orchestrate clusters efficiently while keeping their familiar Kubernetes workflows, which turns complex backend management into a streamlined experience. Support for custom containers and shared compute resources further helps teams collaborate efficiently.
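To make "familiar Kubernetes workflows" concrete, here is a minimal sketch of a standard Kubernetes Deployment for a custom inference container, built in Python as a dictionary that can be printed as JSON and applied with kubectl. The function name build_inference_deployment, the image URI, the port, and the labels are hypothetical placeholders, and the GPU request assumes the cluster exposes GPUs through the NVIDIA device plugin. HyperPod's own inference tooling may use different Kubernetes resources.

```python
import json


def build_inference_deployment(name: str, image: str, gpus: int, replicas: int = 1) -> dict:
    """Return a standard Kubernetes apps/v1 Deployment manifest as a dict.

    The image, labels, and port are illustrative placeholders, not values
    required by SageMaker HyperPod.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,  # your own (custom) inference container
                            "ports": [{"containerPort": 8080}],  # placeholder port
                            # GPUs are requested via the NVIDIA device plugin's
                            # extended resource; the scheduler places the pod on
                            # a node with enough allocatable GPUs.
                            "resources": {"limits": {"nvidia.com/gpu": gpus}},
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = build_inference_deployment(
        name="my-llm",
        image="123456789012.dkr.ecr.us-east-1.amazonaws.com/my-llm:latest",
        gpus=1,
    )
    # kubectl accepts JSON manifests, e.g.: python this_script.py | kubectl apply -f -
    print(json.dumps(manifest, indent=2))
```

Because the result is plain Kubernetes, teams can review, version, and apply it with the same tooling they already use for other services.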

New Deployment Capabilities: What You Need to Know

The most compelling aspect of this upgrade is one-click foundation model deployment from SageMaker JumpStart. In an era where agility is paramount, the value of deploying any of more than 400 open-weight models, such as Mistral and Llama 4 models, with a single click cannot be overstated. Additionally, the option to deploy fine-tuned models from Amazon S3 or Amazon FSx for Lustre accommodates varied business needs, offering a flexible framework that suits teams with different levels of expertise.
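The model sources described above (a JumpStart model, or fine-tuned weights in Amazon S3 or on FSx for Lustre) can be illustrated with a small, hypothetical helper that classifies a model reference before deployment. The ModelSource class, the resolve_model_source function, and the /fsx mount path are assumptions made for this sketch, not part of any AWS API; real HyperPod deployments describe the model source in their own configuration, so the AWS documentation is the place to find the actual fields.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSource:
    """Where model weights come from (hypothetical structure for illustration)."""

    kind: str      # "jumpstart", "s3", or "fsx"
    location: str  # JumpStart model ID, S3 URI, or FSx for Lustre path


def resolve_model_source(ref: str, fsx_mount: str = "/fsx") -> ModelSource:
    """Classify a model reference by its form.

    Conventions assumed for this sketch (not part of any AWS API):
      - "s3://bucket/prefix"   -> fine-tuned weights stored in Amazon S3
      - a path under fsx_mount -> weights on a mounted FSx for Lustre file system
      - a bare identifier      -> treated as a SageMaker JumpStart model ID
    """
    if not ref:
        raise ValueError("model reference must not be empty")

    if ref.startswith("s3://"):
        bucket = ref[len("s3://"):].split("/", 1)[0]
        if not bucket:
            raise ValueError(f"S3 URI is missing a bucket name: {ref!r}")
        return ModelSource("s3", ref)

    mount = fsx_mount.rstrip("/")
    if ref == mount or ref.startswith(mount + "/"):
        return ModelSource("fsx", ref)

    if "/" in ref:
        # A path outside the FSx mount is rejected rather than guessed at.
        raise ValueError(f"path is not under the FSx mount {fsx_mount!r}: {ref!r}")

    return ModelSource("jumpstart", ref)


if __name__ == "__main__":
    for ref in ("example-jumpstart-model-id", "s3://my-bucket/fine-tuned/", "/fsx/models/fine-tuned"):
        print(resolve_model_source(ref))
```

Rejecting ambiguous paths up front is a deliberate choice in this sketch: a deployment that silently picks the wrong source is harder to debug than one that fails early.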

Dynamic Scaling to Meet Demand

HyperPod's dynamic scaling lets businesses adjust resources in real time as deployment demand changes, maintaining performance during traffic spikes. This addresses a common pain point for organizations running AI workloads: adapting to fluctuating load without sacrificing efficiency. Automatic scaling improves the reliability of AI workflows and gives businesses confidence that their infrastructure investment can evolve alongside industry trends.
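A minimal sketch of proportional autoscaling, assuming Kubernetes Horizontal Pod Autoscaler (HPA) style behavior: the replica count grows or shrinks in proportion to how far an observed per-replica metric (for example, concurrent requests) is from its target. The function name and default limits are illustrative, and HyperPod's actual scaling metrics and policies may differ.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """HPA-style replica calculation (a sketch, not HyperPod's internal code).

    Follows the Kubernetes Horizontal Pod Autoscaler rule:
        desired = ceil(current_replicas * current_metric / target_metric)
    skipping the change when the ratio is within `tolerance` of 1.0, and
    clamping the result to [min_replicas, max_replicas].
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: avoid flapping
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Two replicas averaging 90 concurrent requests against a target of 30.
    print(desired_replicas(2, 90.0, 30.0))  # prints 6
```

For example, two replicas averaging 90 concurrent requests against a per-replica target of 30 scale to six, while a reading within 10% of the target leaves the count unchanged; the upper bound keeps a sudden spike from requesting more capacity than the cluster can provide.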

Conclusion: A New Frontier for AI Deployments

As organizations increasingly look to AI for transformation, tools like Amazon SageMaker HyperPod represent not just a technological advance but a shift in what businesses can achieve operationally. By reducing the complexity traditionally associated with deploying foundation models, Amazon frees firms to focus on more innovative uses of generative AI. CEOs, CMOs, and COOs looking to embed AI deeply in their business strategies should take note: embracing such innovations could position them at the forefront of their industries.
