Revolutionizing AI Quality Assurance with Amazon Bedrock's New RAG Evaluation Tools

As organizations increasingly rely on artificial intelligence (AI) and large language models (LLMs) to enhance their operations, concerns are growing about how to evaluate AI outputs, particularly in Retrieval Augmented Generation (RAG) applications. Traditional evaluation methods often struggle with reliability and scale, so leaders responsible for AI deployment and innovation need more robust approaches.

Challenges with Existing AI Evaluation Methods

Conventional evaluation methods for AI outputs involve clear trade-offs. Human assessment is thorough but slow and costly, which makes it impractical for large-scale deployments. Automated metrics are faster, yet they often miss nuances such as contextual relevance, helpfulness, and the potential for harmful outputs, leaving organizations with incomplete evaluation frameworks. Many automated approaches also depend on 'ground truth' reference data, which is notoriously difficult to define for open-ended generation.

Introducing Amazon Bedrock's RAG Evaluation Tool

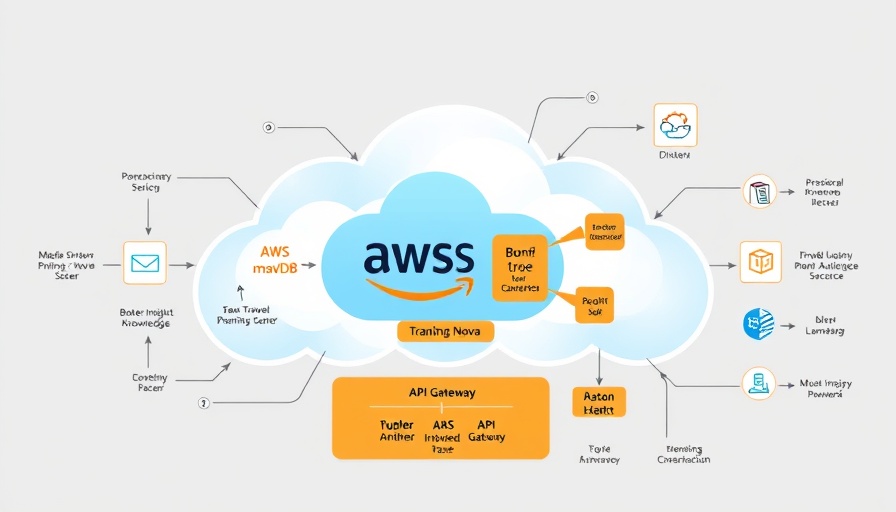

Amazon Bedrock has taken a significant step forward by launching new capabilities that simplify the assessment of RAG applications. The new LLM-as-a-judge (LLMaaJ) feature uses an LLM to grade model outputs, and the RAG evaluation tool applies LLM-based evaluation to RAG applications. Together they let organizations maintain quality standards at scale. Because evaluations are automated, businesses can assess model outputs across different tasks and contexts and gain a more complete understanding of both retrieval and generation quality.
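The LLM-as-a-judge pattern can be illustrated with a minimal Python sketch. It does not call Amazon Bedrock: `call_llm` is a hypothetical stand-in for whichever judge model you invoke, and the prompt template and JSON response format are assumptions made for illustration, not Bedrock's internal prompts. The sketch shows the core idea: the judge receives the question, the retrieved context, and the generated answer, then returns a score plus a natural language explanation.

```python
import json
from typing import Callable

# Hypothetical judge prompt; Amazon Bedrock's actual judge prompts are not shown here.
JUDGE_TEMPLATE = """You are evaluating the output of a RAG application.
Question: {question}
Retrieved context: {context}
Answer: {answer}

Rate the answer's {metric} on a scale from 0 to 1 and briefly explain why.
Respond only with JSON: {{"score": <number>, "explanation": "<text>"}}"""


def judge(question: str, context: str, answer: str, metric: str,
          call_llm: Callable[[str], str]) -> dict:
    """Ask a judge model to grade one RAG output on one metric."""
    prompt = JUDGE_TEMPLATE.format(question=question, context=context,
                                   answer=answer, metric=metric)
    result = json.loads(call_llm(prompt))
    # Clamp the score into [0, 1] in case the judge returns an out-of-range value.
    result["score"] = min(1.0, max(0.0, float(result["score"])))
    return result


def stub_llm(prompt: str) -> str:
    """Stand-in judge that always returns a fixed verdict (for testing only)."""
    return '{"score": 0.9, "explanation": "The answer is supported by the context."}'


verdict = judge("What is the capital of France?",
                "Paris is the capital of France.",
                "Paris", "correctness", stub_llm)
print(verdict["score"], "-", verdict["explanation"])
```

Passing the judge in as a function keeps the grading logic independent of any particular model or SDK, so the same code can be exercised with a stub and later connected to a real model.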

Streamlined Evaluation Lifecycle for Enhanced AI Practices

With these new capabilities, evaluating RAG applications in Amazon Bedrock becomes more structured and reliable. Organizations can now:

- Systematically evaluate multiple dimensions of AI performance, including correctness, completeness, and harmfulness, which supports responsible AI practices.

- Scale evaluations across thousands of AI outputs, substantially reducing manual evaluation costs while maintaining quality standards.

- Access comprehensive metrics accompanied by natural language explanations, which make the results accessible to stakeholders without technical expertise.
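Scaling evaluation across many outputs largely comes down to aggregating per-item judgments. The following sketch is a hypothetical illustration: the `(metric, score, explanation)` record shape and the `summarize` helper are assumptions, not the report format Amazon Bedrock produces, and it assumes higher scores are better for every metric. It averages scores per metric and surfaces the explanations for the lowest-scoring outputs, which is where natural language feedback is most useful to reviewers.

```python
from collections import defaultdict
from statistics import mean


def summarize(verdicts, worst_k=1):
    """Aggregate judge verdicts per metric.

    Each verdict is a hypothetical (metric, score, explanation) tuple with the
    score in [0, 1]; higher scores are assumed to be better for every metric.
    """
    by_metric = defaultdict(list)
    for metric, score, explanation in verdicts:
        by_metric[metric].append((score, explanation))

    report = {}
    for metric, items in by_metric.items():
        items.sort(key=lambda item: item[0])  # lowest scores first
        report[metric] = {
            "mean": round(mean(score for score, _ in items), 3),
            "count": len(items),
            # Explanations for the weakest outputs, for human review.
            "worst": [explanation for _, explanation in items[:worst_k]],
        }
    return report


verdicts = [
    ("correctness", 1.0, "Matches the retrieved policy document."),
    ("correctness", 0.5, "States the wrong refund window."),
    ("completeness", 0.8, "Omits one eligibility condition."),
]
print(summarize(verdicts))
```

Keeping only the worst few explanations per metric keeps the report short enough for non-technical stakeholders to read, even when thousands of outputs were scored.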

The Future of AI Evaluation: Implications and Opportunities

With these advances in evaluation technology, CEOs, CMOs, and COOs can use Amazon Bedrock’s RAG evaluation features to support the transition toward responsible AI. Over the long term, this points toward a future where AI solutions can be deployed and scaled with confidence that output quality aligns with organizational goals. Embedding robust evaluation frameworks within the AI lifecycle also supports informed decisions about model selection, optimization strategies, and potential applications.

Conclusion: Embrace the AI Evaluation Revolution

The launch of RAG evaluation and LLM-as-a-judge capabilities within Amazon Bedrock transforms the landscape of AI assessment. As organizations prioritize quality assurance in AI deployments, these evaluation mechanisms will be critical for navigating the complexity of AI development while fostering innovation. By integrating these tools into your AI strategy, you can streamline your AI applications and ensure that they meet the evolving demands of your business. Explore Amazon Bedrock’s latest offerings and take your first steps toward better AI application performance today!
