The Impending Cuts at the US AI Safety Institute: What It Means for the Future of AI Regulation

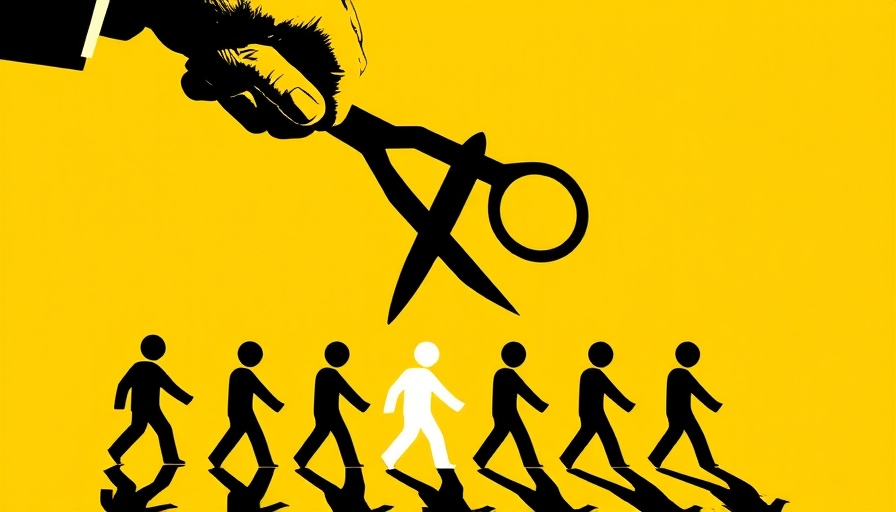

The recent news of potential mass firings at the US AI Safety Institute (AISI) raises significant concerns not only about workforce stability but also about the broader implications for AI regulation in the United States. As the Trump administration gears up for dramatic cuts, the stability of AI oversight is being put to the test. With such cuts expected to hit both AISI and its parent agency, the National Institute of Standards and Technology (NIST), we must explore the ramifications for a sector that is becoming increasingly critical in our fast-paced digital landscape.

Why the AISI Matters

The AISI plays a pivotal role in advancing the safety of AI models, collaborating with major developers such as OpenAI and Anthropic. These partnerships are designed to ensure that AI helps rather than harms society, a mission vital to both public trust and continued technological development. However, with looming staff reductions that could leave the AISI "gutted," its ability to uphold these responsibilities is at risk.

Broader Implications: Cutting Workforce and Innovation

According to Axios, around 497 positions within NIST, many tied to AISI, are slated for elimination. The impact goes beyond AI safety staffing: it also threatens the CHIPS initiative, which aims to strengthen semiconductor development in the US. Losing a majority of the workforce dedicated to such crucial work may not only slow the pace of AI advancement but also put America at a disadvantage relative to nations like China in a fiercely competitive domain. As Chairman Frank Lucas emphasized, the agency is already strained under current funding pressures, making additional cuts highly damaging.

The Political Landscape: Reconciling Safety and Dominance

This shift follows the Trump administration's stated priority of "AI dominance" over regulatory frameworks. Coming after a Biden-era executive order that emphasized careful AI oversight, these cuts signal a stark pivot towards deregulation. This raises critical questions about the balance between fostering innovation and ensuring safety, especially as AI systems spread across industries. Many fear that sidelining the safety component jeopardizes effective oversight at a time when AI's potential social implications are becoming painfully evident, as shown by real-world incidents involving AI-driven biases and unsafe practices.

Future Predictions: A Call to Action

The impending cuts at the US AI Safety Institute reflect broader national challenges that demand immediate attention from executives and policymakers alike. The delicate equilibrium between leveraging AI for competitive gain and securing its ethical deployment must be constantly safeguarded. Industry leaders must advocate for a strategic realignment that preserves the essential role of safety and regulatory oversight, positioning themselves not merely as beneficiaries of AI technology but as stewards of its responsible development.

Conclusion: Be Informed, Act Wisely

The situation unfolding at the AISI serves as a pointed reminder that the future of AI is not merely about technological superiority; it is also about preserving society's ethics and values. For executives and decision-makers, it's essential to stay informed about these developments and push for frameworks that encourage both innovation and safety. The actions taken today will inevitably shape the landscape of AI tomorrow.
