Reducing Latency in Conversational AI: The Game-Changer

The rapid evolution of generative AI has transformed conversational assistants, enabling real-time interactions in sectors ranging from customer service to education. These advances bring a pressing challenge: response latency, which directly shapes the user experience. Achieving response times that mirror natural human conversation, ideally between 200 and 500 milliseconds, requires a multi-faceted approach that combines cloud computing with edge technology.

Understanding Response Latency and Its Challenges

Response latency has two primary components: on-device processing latency and time to first token (TTFT). On-device processing latency is the time taken to handle user input locally, such as a voice command, including speech-to-text (STT) conversion. TTFT measures the interval from when the user's prompt is sent to the cloud until the first token of the response is received.

Delay in either component disrupts conversational flow. TTFT is especially critical for interfaces that stream responses, because it determines how soon the user sees or hears the assistant begin to reply.
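The two components described above can be measured separately and then summed. The following is a minimal sketch, not a reference implementation: `measure_ttft`, `total_latency_ms`, and `meets_target` are hypothetical helper names, the token stream is assumed to be any Python iterable that yields tokens as they arrive, and the 500 ms default is taken from the upper end of the target range mentioned earlier.

```python
import time
from typing import Callable, Iterable, Iterator, Tuple


def measure_ttft(
    stream: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> Tuple[float, Iterator[str]]:
    """Return (ttft_seconds, tokens) for a streaming response.

    Assumption: the timer starts when this function is called, which stands
    in for the moment the prompt is sent. It stops when the first token
    arrives from the stream.
    """
    start = clock()
    iterator = iter(stream)
    try:
        first = next(iterator)  # blocks until the first token is available
    except StopIteration:
        raise ValueError("stream produced no tokens") from None
    ttft = clock() - start

    def replay() -> Iterator[str]:
        # Hand back the full stream, including the token already consumed.
        yield first
        yield from iterator

    return ttft, replay()


def total_latency_ms(on_device_ms: float, ttft_ms: float) -> float:
    """Response latency as the sum of on-device processing time and TTFT."""
    return on_device_ms + ttft_ms


def meets_target(latency_ms: float, target_ms: float = 500.0) -> bool:
    """Check a measured latency against a conversational target (hypothetical default)."""
    return latency_ms <= target_ms
```

In practice the `stream` argument would be whatever iterator a streaming inference client returns. The `clock` parameter exists only so the measurement can be tested with a fake timer.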

Leveraging Edge Computing: AWS Local Zones

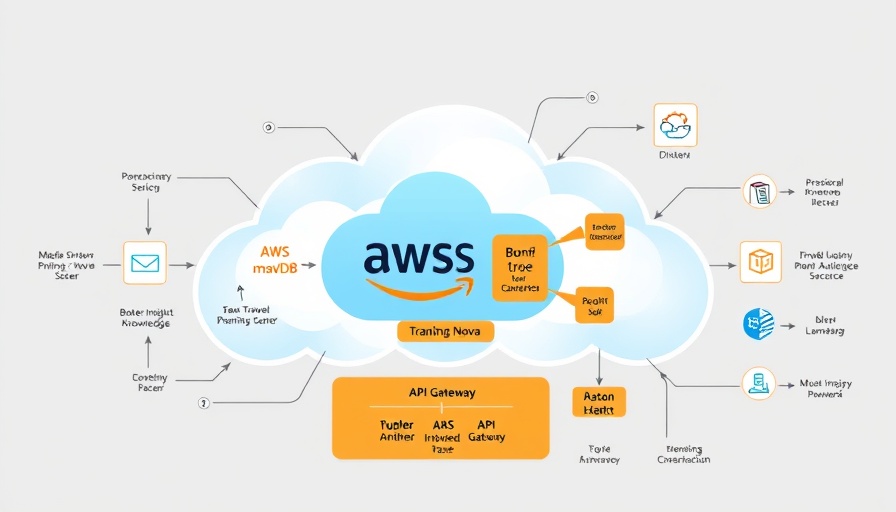

To address the latency challenge, AWS offers Local Zones, which extend AWS services closer to users by placing infrastructure in metropolitan areas. Combined with dynamic traffic routing, this architecture reduces network latency and leads to faster response times when users engage with conversational AI applications.

Deploying foundation model (FM) inference endpoints in Local Zones can substantially reduce TTFT. Benchmark tests showed reductions of up to 41% in Los Angeles and 42% in Honolulu compared with traditional AWS Regions.
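For readers who want to compare their own measurements against figures like these, the percentage reduction is simple arithmetic. The sketch below assumes two TTFT measurements in milliseconds, one against a Region endpoint and one against a Local Zone endpoint. The function name is hypothetical, and the numbers in the usage line are illustrative placeholders, not the benchmark's raw data.

```python
def ttft_reduction_pct(region_ms: float, local_zone_ms: float) -> float:
    """Percentage reduction in TTFT when moving from a Region to a Local Zone."""
    if region_ms <= 0:
        raise ValueError("region_ms must be positive")
    return 100.0 * (region_ms - local_zone_ms) / region_ms


# Illustrative placeholder values only (not measured results).
reduction = ttft_reduction_pct(region_ms=200.0, local_zone_ms=118.0)
print(f"TTFT reduction: {reduction:.0f}%")
```

A negative result would mean the Local Zone endpoint was slower than the Region in that measurement.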

The Practicalities of Implementing Local Zone Architecture

To use AWS Local Zones, organizations must configure their infrastructure appropriately. The steps include selecting the right instance types, managing VPC connections, and implementing dynamic routing policies to optimize end-user traffic.
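The idea behind a dynamic routing policy can be illustrated without any AWS-specific API. The sketch below assumes that latency probes have already been collected per endpoint and simply picks the endpoint with the lowest median, falling back to a default when no probes exist. The function name and the endpoint labels are hypothetical, and a production setup would more likely rely on a managed DNS or traffic-routing service than on client-side logic like this.

```python
from statistics import median
from typing import Dict, List


def pick_endpoint(probes_ms: Dict[str, List[float]], fallback: str) -> str:
    """Return the endpoint name with the lowest median probe latency.

    Endpoints with no samples are ignored. If nothing has been probed,
    the fallback (for example, the parent Region) is returned.
    """
    medians = {
        name: median(samples)
        for name, samples in probes_ms.items()
        if samples
    }
    if not medians:
        return fallback
    return min(medians, key=lambda name: medians[name])


# Hypothetical endpoint labels and illustrative latencies in milliseconds.
probes = {
    "local-zone-endpoint": [18.0, 22.0, 20.0],
    "region-endpoint": [65.0, 70.0, 61.0],
}
chosen = pick_endpoint(probes, fallback="region-endpoint")
```

Using the median rather than the mean keeps a single slow probe from steering traffic away from an otherwise fast endpoint.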

For instance, deploying open models such as Meta's Llama 3.2-3B, available through Hugging Face, on AWS Local Zones is particularly effective: a small model combines efficiency with the low resource requirements that real-time applications need.

Future-Forward: Predictions for Conversational AI

As the demand for instantaneous communication grows, advances in AI latency optimization will likely continue to emerge. Companies will increasingly explore hybrid architectures and edge computing to enhance their AI applications. This may include not only further refinements in local processing but also new techniques in model deployment and data management.

For CEOs and CMOs, understanding these emerging trends can ensure they stay ahead in a market rapidly evolving around AI capabilities. The investment in technologies like AWS Local Zones presents a strategic advantage by providing smoother, more human-like interaction experiences.

Enhancing User Experience Through Continuous Improvement

The integration of edge services will dramatically affect how businesses interact with consumers, not only in AI responsiveness but also in customer satisfaction and retention. As we drive towards enhanced AI solutions, engaging users with seamless interactions will become a critical component of technology adoption and success.

Conclusion: The Road Ahead for Conversational AI

Minimizing response latency in conversational AI is not merely about technology; it is about user experience and engagement. Edge computing through AWS Local Zones demonstrates tangible benefits in real-world applications. Organizations should actively explore these enhancements to maintain a competitive advantage in the evolving landscape of AI technologies.

Interested in optimizing your own AI applications for enhanced performance? Explore how AWS Local Zones can lead your conversational AI strategy into the future.
