Revolutionizing AI Deployment with AWS and vLLM

In the constantly evolving landscape of artificial intelligence, deploying large language models (LLMs) efficiently has become a critical concern for business leaders. AWS’s latest offering pairs vLLM, an open-source engine for LLM inference and serving, with Amazon EC2 instances powered by AWS Inferentia chips. This gives organizations a new option for running LLMs at lower cost and greater scale across their business operations.

Future Predictions and Trends

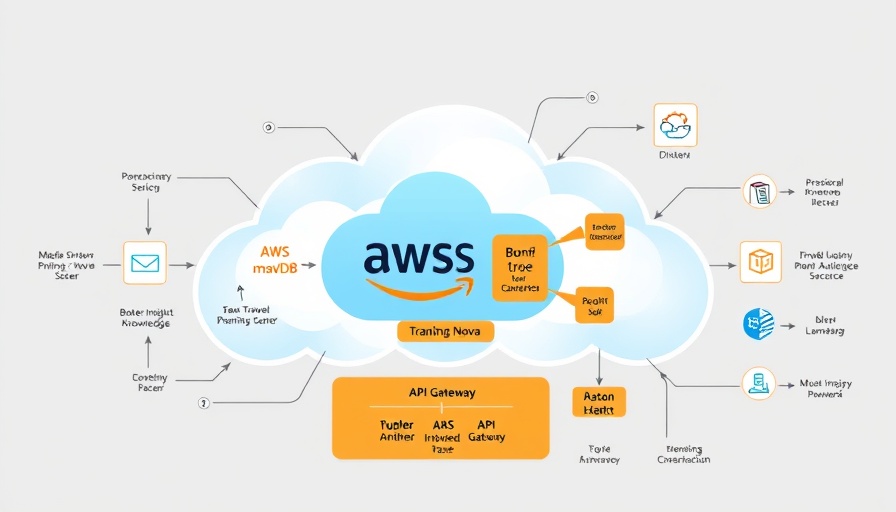

The integration of AWS Inferentia with vLLM is a significant step toward AI applications that are more accessible and cost-effective. As businesses look to AI for strategic advantage, adoption of hardware purpose-built for AI workloads, such as AWS Inferentia, is likely to keep growing. This shift promises to reduce costs and improve the scalability and efficiency of LLM deployment, broadening the reach of AI across industries.

Why This Matters for Business Leaders

For CEOs, CMOs, and COOs aiming to capitalize on AI advancements, understanding this shift is essential. By leveraging AWS’s capabilities, businesses can streamline AI deployment and apply LLMs to decision-making, customer service, and product innovation. Leaders who follow these developments will be better positioned to implement AI-driven strategies that strengthen their operations and competitive standing.

Actionable Insights and Practical Tips

To take advantage of AWS and vLLM, leaders should start by evaluating their current AI deployment strategies and identifying where efficiency gains and cost reductions are possible. Collaborating with AWS partners on tailored solutions and training teams on these technologies can help maximize the benefits and keep your organization at the forefront of innovation.
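One practical way to begin that evaluation is to compare deployment options by cost per million generated tokens. The sketch below is a minimal Python illustration under stated assumptions: the `Deployment` class and `compare` helper are hypothetical, and the hourly prices and throughput figures are placeholders to replace with current pricing and your own benchmark results. They are not AWS or vLLM numbers.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    """A hypothetical deployment option; every figure is supplied by you, not taken from AWS pricing."""
    name: str
    hourly_cost_usd: float    # instance price per hour (check current pricing yourself)
    tokens_per_second: float  # measured generation throughput for your model and workload

    def cost_per_million_tokens(self) -> float:
        # Tokens produced in one hour at the measured throughput.
        tokens_per_hour = self.tokens_per_second * 3600
        # Spread the hourly price over those tokens, then scale to one million tokens.
        return self.hourly_cost_usd / tokens_per_hour * 1_000_000


def compare(deployments: list[Deployment]) -> list[tuple[str, float]]:
    """Return (name, cost per 1M tokens) pairs sorted from cheapest to most expensive."""
    return sorted(
        ((d.name, d.cost_per_million_tokens()) for d in deployments),
        key=lambda pair: pair[1],
    )


if __name__ == "__main__":
    # Placeholder numbers for illustration only; substitute your own benchmarks and prices.
    options = [
        Deployment("option-a", hourly_cost_usd=4.0, tokens_per_second=500.0),
        Deployment("option-b", hourly_cost_usd=2.0, tokens_per_second=400.0),
    ]
    for name, cost in compare(options):
        print(f"{name}: ${cost:.2f} per 1M tokens")
```

Because cost per token is the hourly price divided by hourly output, a cheaper instance only comes out ahead if its throughput on your model does not fall by a larger proportion than its price.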
