Revolutionizing Reasoning in AI: Insights from OpenAI's o1 and DeepSeek's Innovations

Large language models (LLMs) are entering a new phase marked by much stronger reasoning capabilities. In September 2024, OpenAI introduced its "o1" model, a shift in how LLMs are trained to think and reason before they answer. The research lab DeepSeek later published its findings on replicating this behavior, documenting one path toward AI systems that work through a problem step by step rather than answering immediately.

The Concept of “Thinking” Tokens

At the heart of OpenAI's o1 model are what are referred to as "thinking" tokens, which serve as markers for the model's chain of thought. These tokens delimit where the model's reasoning begins and ends. Separating the intermediate steps from the final answer makes the reasoning process easier to follow, and it lets a user interface present the reasoning and the answer differently, so that responses to complex queries are easier to digest.
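To make the idea of delimiting tokens concrete, here is a minimal sketch of how an application might separate a reasoning span from the final answer. The `<think>` and `</think>` delimiters and the `split_reasoning` helper are assumptions chosen for illustration; the actual special tokens vary by model and are not specified in this article.

```python
import re
from typing import Tuple

# Hypothetical delimiters, assumed for illustration only.
THINK_OPEN = "<think>"
THINK_CLOSE = "</think>"


def split_reasoning(text: str) -> Tuple[str, str]:
    """Return (reasoning, answer) extracted from a raw model output string."""
    pattern = re.escape(THINK_OPEN) + r"(.*?)" + re.escape(THINK_CLOSE)
    match = re.search(pattern, text, flags=re.DOTALL)
    if match is None:
        # No reasoning span found: treat the whole output as the answer.
        return "", text.strip()
    reasoning = match.group(1).strip()
    # Everything outside the first reasoning span is treated as the answer.
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer


reasoning, answer = split_reasoning("<think>2 + 2 = 4</think>The answer is 4.")
print("Reasoning:", reasoning)
print("Answer:", answer)
```

An interface built this way could, for example, collapse the reasoning behind a toggle and show only the answer by default.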

Emerging Insights from DeepSeek’s Research

DeepSeek's main contribution is its publication titled "DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning." The paper introduces two models: DeepSeek-R1-Zero, which is trained with reinforcement learning exclusively, and DeepSeek-R1, which combines supervised fine-tuning with reinforcement learning. This layered approach points toward models that not only learn from vast datasets but also actively refine their reasoning abilities through trial and error.

Learning through Trial and Error: The Power of Reinforcement Learning

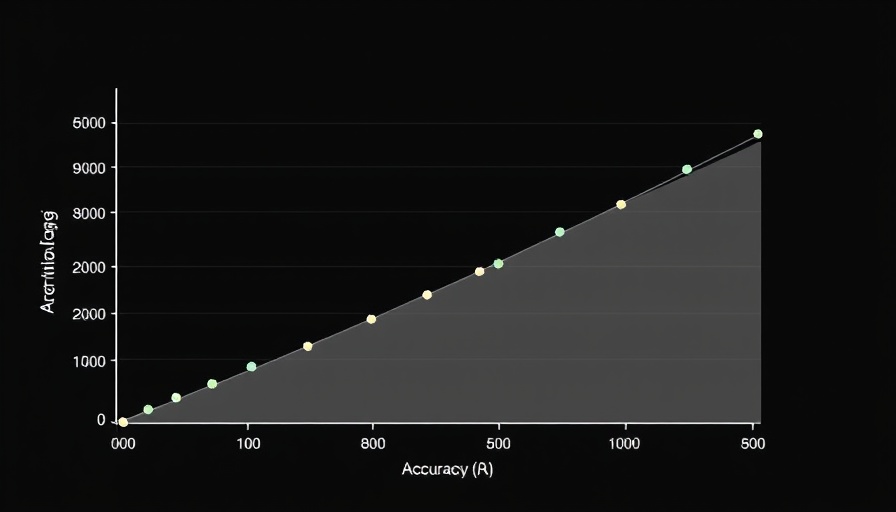

Reinforcement learning (RL) stands out as an effective strategy for training reasoning models. Rather than imitating pre-defined examples, a model like DeepSeek-R1-Zero learns through experience: it generates responses and receives rewards based on how good those responses are. Because the reasoning strategy itself is not prescribed, unexpected reasoning abilities can emerge during training, ultimately leading to improved performance.
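As a rough illustration of what "receiving rewards" can mean in practice, the sketch below scores a completion with two simple rule-based checks: one for output format and one for answer correctness. It is a toy example under stated assumptions, not DeepSeek's implementation; the function names, the `<think>` tags, the exact-match comparison, and the equal weighting are all hypothetical.

```python
import re


def format_reward(completion: str) -> float:
    """1.0 if the completion opens with a <think>...</think> block followed by a non-empty answer."""
    pattern = r"\s*<think>.+?</think>\s*\S.*"
    return 1.0 if re.fullmatch(pattern, completion, flags=re.DOTALL) else 0.0


def accuracy_reward(completion: str, reference: str) -> float:
    """1.0 if the text after the last reasoning block exactly matches the reference answer."""
    answer = completion.split("</think>")[-1].strip()
    return 1.0 if answer == reference.strip() else 0.0


def total_reward(completion: str, reference: str) -> float:
    """Sum of the two checks (equal weighting is an assumption)."""
    return format_reward(completion) + accuracy_reward(completion, reference)


print(total_reward("<think>3 * 4 = 12</think>12", "12"))
print(total_reward("12", "12"))
```

In an RL training loop, a score like this would be computed for each sampled response and used to nudge the model toward higher-scoring behavior; the optimization step itself is omitted here.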

Unpacking Advanced Reasoning Techniques

Building on the foundations laid by models like o1 and DeepSeek-R1, the community continues to explore techniques for enhancing reasoning in LLMs. Chain-of-Thought (CoT) prompting guides a model to write out its reasoning step by step, which increases accuracy on complex tasks. Self-consistency improves reliability by sampling several independent reasoning paths for the same question and taking a majority vote over their final answers. Tree-of-Thought (ToT) prompting goes further by branching into multiple candidate reasoning steps, evaluating them, and pursuing the most promising ones, which enriches the decision-making process.
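The self-consistency idea can be sketched in a few lines. In this minimal example, `sample_answer` is a hypothetical stand-in for a call that runs one chain-of-thought sample and returns only its final answer; no real model API is assumed.

```python
from collections import Counter
from typing import Callable, List


def self_consistent_answer(sample_answer: Callable[[], str], n_samples: int = 5) -> str:
    """Sample several final answers and return the most common one (majority vote)."""
    answers: List[str] = [sample_answer() for _ in range(n_samples)]
    # most_common(1) returns a list holding the single (answer, count) pair with the highest count.
    return Counter(answers).most_common(1)[0][0]


# Usage with a stubbed sampler that replays fixed answers instead of calling a model.
fake_samples = iter(["42", "41", "42", "42", "40"])
print(self_consistent_answer(lambda: next(fake_samples), n_samples=5))
```

Voting on the final answer, rather than on the reasoning text, means that paths which reason differently but agree on the result still reinforce each other.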

Implications for Digital Transformation in Enterprises

For executives in fast-growing companies embracing digital transformation, understanding these advancements in AI is crucial. The capabilities demonstrated by OpenAI and DeepSeek point to an era in which AI can handle multi-step reasoning tasks that previously required human judgment. This offers opportunities for improved operational efficiency and enables organizations to use AI-driven insights for better strategic decision-making.

As AI continues to evolve, exploration of these advanced models will likely unlock applications that redefine the interaction between technology and human problem-solving capabilities.
