Unpacking the Cost Management Challenges in Generative AI

The rise of generative AI services has transformed the landscape of software as a service (SaaS), pushing firms to balance scalability against cost. Executives such as CEOs, CMOs, and COOs are now at the forefront of these discussions, focusing on how to control operational expenditure while delivering cutting-edge services to a diverse clientele. In multi-tenant environments the challenge is magnified, because different customers often exhibit very different usage patterns.

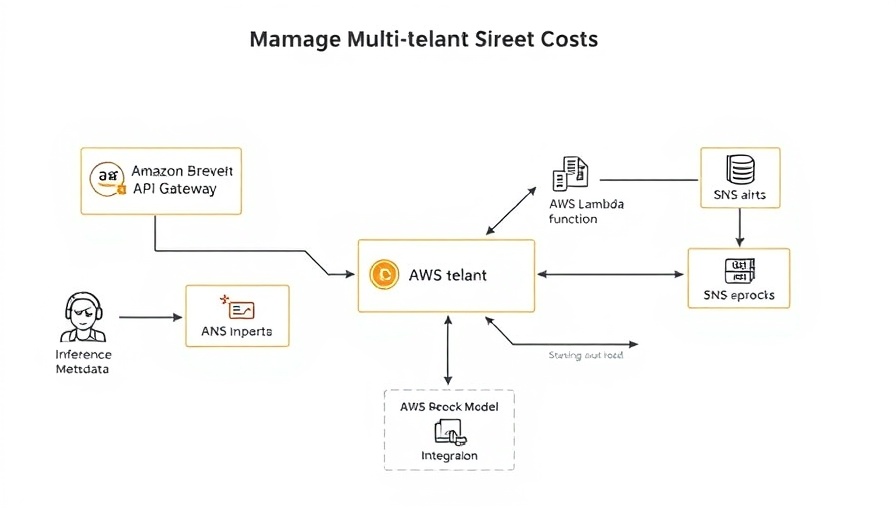

The Importance of Application Inference Profiles

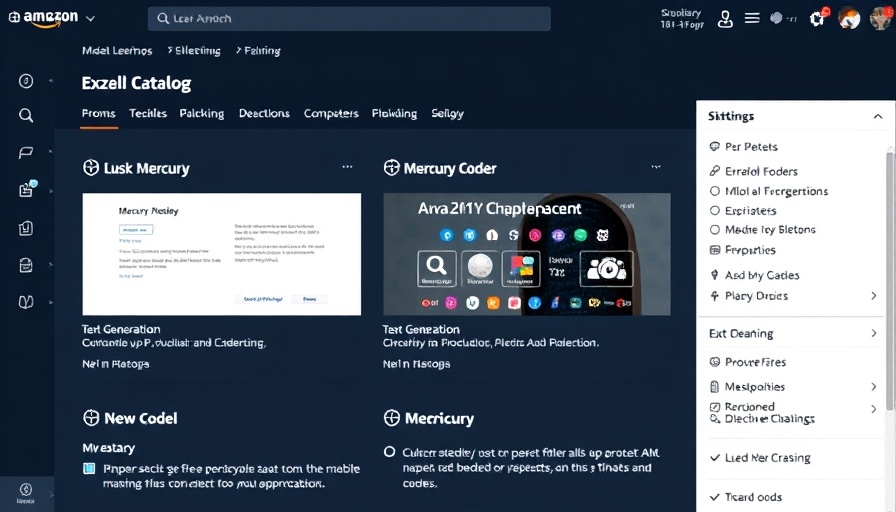

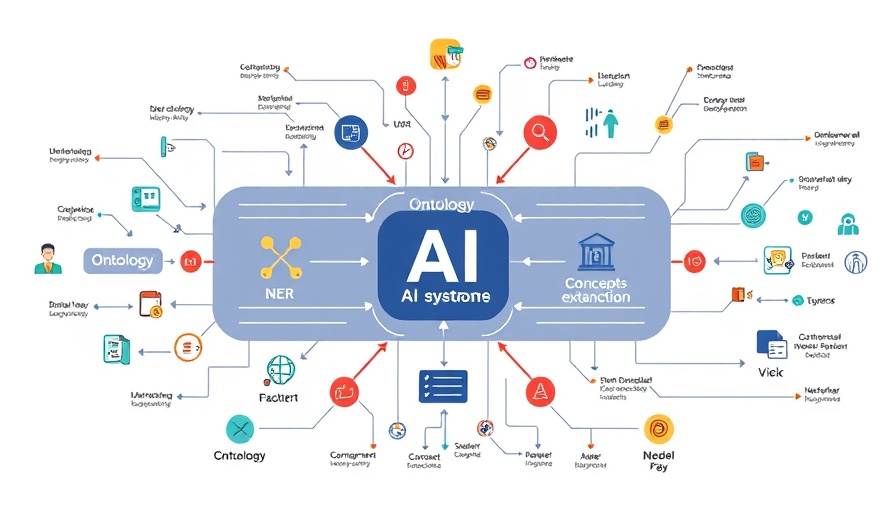

Amid the complexities of cost allocation, Amazon Bedrock offers application inference profiles. An application inference profile wraps a foundation model (or a system-defined inference profile) and can carry its own tags, so companies can track model invocation usage and cost at a granular level, which is essential for businesses deploying generative AI at scale. By recording usage per customer or tenant, organizations can implement precise cost management strategies and identify optimizations specific to each usage pattern.
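As a minimal sketch, assuming one application inference profile per tenant, the request for the Bedrock control-plane `CreateInferenceProfile` operation can be assembled as plain keyword arguments. The `build_tenant_profile_request` helper, the `tenant-<id>` naming scheme, and the tag keys are illustrative assumptions; the actual AWS call is left out so the snippet runs without credentials.

```python
from __future__ import annotations

from typing import Any


def build_tenant_profile_request(
    tenant_id: str,
    model_arn: str,
    extra_tags: dict[str, str] | None = None,
) -> dict[str, Any]:
    """Build kwargs for bedrock.create_inference_profile().

    Hypothetical helper: one application inference profile per tenant,
    copied from a foundation model or system-defined profile ARN.
    """
    if not tenant_id:
        raise ValueError("tenant_id must be non-empty")
    # TenantID is applied last so extra_tags cannot override it.
    tags = {**(extra_tags or {}), "TenantID": tenant_id}
    return {
        "inferenceProfileName": f"tenant-{tenant_id}",
        "description": f"Application inference profile for tenant {tenant_id}",
        "modelSource": {"copyFrom": model_arn},
        # Bedrock tags are lists of lowercase "key"/"value" pairs.
        "tags": [{"key": k, "value": v} for k, v in sorted(tags.items())],
    }
```

With credentials configured, the request could be sent with `boto3.client("bedrock").create_inference_profile(**request)`. The returned `inferenceProfileArn` is then passed as the `modelId` in `bedrock-runtime` calls such as `converse`, so each invocation is attributed to that tenant's tags once the tag keys are activated as cost allocation tags.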

Flexibility in Monitoring and Notifications

Proactive resource management is not merely beneficial but a necessity for accurate financial forecasting. A traditional binary alerting system, which signals either normal operation or a crisis, is insufficient when resource consumption varies across many levels. A multi-tiered alert system that classifies alerts from green (normal) to red (critical) lets organizations respond proportionately to both minor fluctuations and major spikes in usage.
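A multi-tiered scheme can be sketched as a function that maps spend against a budget to a color tier. The four tier names, the 70/90/100 percent thresholds, and `classify_spend` are illustrative assumptions rather than Bedrock features; in practice the spend figure might come from per-profile usage metrics or billing data.

```python
from enum import Enum


class AlertTier(Enum):
    GREEN = "green"    # normal operation
    YELLOW = "yellow"  # elevated, worth watching
    ORANGE = "orange"  # at risk of exceeding the budget
    RED = "red"        # critical: budget reached or exceeded


# Hypothetical thresholds as a fraction of the period budget,
# ordered from most to least severe.
DEFAULT_THRESHOLDS = (
    (1.0, AlertTier.RED),
    (0.9, AlertTier.ORANGE),
    (0.7, AlertTier.YELLOW),
)


def classify_spend(spend: float, budget: float,
                   thresholds=DEFAULT_THRESHOLDS) -> AlertTier:
    """Return the most severe tier whose threshold the spend ratio meets."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    ratio = spend / budget
    for limit, tier in thresholds:
        if ratio >= limit:
            return tier
    return AlertTier.GREEN
```

For a 1,000 USD budget, 850 USD of spend lands in the yellow tier and 950 USD in orange, so a team can escalate gradually instead of waiting for a single "over budget" signal.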

Transforming Cost Allocation with Tagging Mechanisms

Through the strategic use of tagging, application inference profiles give organizations a logical framework for attributing resource usage. By assigning metadata such as TenantID, business unit, or ApplicationID, businesses gain a clearer picture of where their costs originate. This precision helps manage financial exposure and highlights optimization opportunities tailored to each tenant's needs, reinforcing the value of each customer relationship.
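To illustrate tag-based attribution, the sketch below rolls hypothetical cost line items up by a tag key such as TenantID and computes each group's share of the total. The `CostRecord` shape and the helper names are assumptions; real figures would come from a billing export such as AWS Cost Explorer or the Cost and Usage Report after the tag keys are activated as cost allocation tags.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class CostRecord:
    """One hypothetical billing line item with its cost allocation tags."""
    cost_usd: float
    tags: dict[str, str] = field(default_factory=dict)


def cost_by_tag(records: list[CostRecord],
                tag_key: str = "TenantID") -> dict[str, float]:
    """Sum cost per value of tag_key."""
    totals: dict[str, float] = defaultdict(float)
    for record in records:
        # Untagged usage is grouped separately so it stays visible.
        totals[record.tags.get(tag_key, "untagged")] += record.cost_usd
    return dict(totals)


def cost_share(totals: dict[str, float]) -> dict[str, float]:
    """Convert per-group totals into fractions of the overall spend."""
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {key: value / grand_total for key, value in totals.items()}
```

Keeping an explicit "untagged" bucket is a deliberate choice: a growing untagged share usually signals profiles or workloads that were created without the expected tags.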

Future Trends in AI Cost Management

As the AI industry moves toward more complex systems and more versatile applications, understanding cost implications and managing operational risk will become increasingly critical. Current trends point toward more adaptive systems that use data analytics for real-time decision-making. This evolution will likely change how organizations perceive AI, shifting it from a cost center to a strategic asset.

Conclusion: Take Proactive Steps in Managing AI Costs

Ultimately, navigating AI cost management takes more than technical ingenuity; it demands a strategic focus on resource allocation and client relationships. With tools like application inference profiles, organizations can avoid cost overruns while still innovating in dynamic business environments. In an era where adaptability defines success, leaders should stay ahead by establishing robust frameworks tailored to their own operational models.
