Unlocking AI Potential: Benchmarking Custom Models on Amazon Bedrock

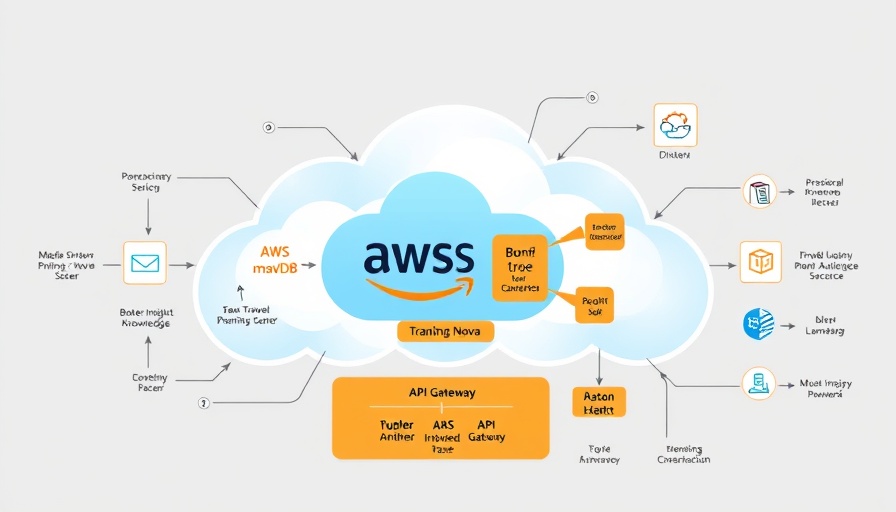

In an era where artificial intelligence (AI) is becoming a linchpin for organizational transformation, the deployment of customized AI applications has never been more critical. Amazon Bedrock emerges as a powerful solution that allows companies to train and fine-tune large language models (LLMs) while maintaining control over costs and deployment complexities. However, a fundamental question persists: how can organizations ensure their AI models meet performance expectations before they go live?

Understanding the Need for Benchmarking in AI Deployments

Before getting into the details of performance benchmarking, it helps to understand why it matters. As stated in the AWS blog, deploying AI models can consume up to 30% of project time because of the complexity of choosing instance types and configuring parameters. Benchmarking identifies potential production issues, such as throttling or performance bottlenecks, before launch, so businesses can verify that their models handle expected loads efficiently.

Leveraging Open Source Tools for Optimal Performance Evaluation

Open-source tools like LLMPerf and LiteLLM allow organizations to run comprehensive performance evaluations on models deployed through Amazon Bedrock. To simulate a load test, LLMPerf creates concurrent Ray clients that send requests to the model and then analyzes the responses, reporting critical metrics such as latency and throughput. This helps in sizing for immediate operational needs and in judging the long-term viability of LLMs across tasks ranging from coding to chatbots.
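To make the idea of a concurrent load test concrete, here is a minimal sketch that sends requests from several workers and summarizes latency and throughput. It is not LLMPerf's implementation: it uses a standard-library thread pool instead of Ray, and `fake_invoke` is a hypothetical stand-in for a real model call, so the numbers it produces are illustrative only.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def fake_invoke(prompt: str) -> int:
    """Hypothetical stand-in for a model call; returns a pretend output-token count."""
    time.sleep(0.01)  # simulate network and inference time
    return len(prompt.split()) * 2


def timed_call(prompt: str) -> tuple[float, int]:
    """Run one request and return (latency in seconds, output tokens)."""
    start = time.perf_counter()
    tokens = fake_invoke(prompt)
    return time.perf_counter() - start, tokens


def run_load_test(prompts: list[str], concurrency: int) -> dict[str, float]:
    """Send all prompts using a fixed number of concurrent workers."""
    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_call, prompts))
    wall_time = time.perf_counter() - wall_start

    latencies = [latency for latency, _ in results]
    total_tokens = sum(tokens for _, tokens in results)
    return {
        "requests": len(results),
        "mean_latency_s": statistics.mean(latencies),
        # quantiles(n=10) returns 9 cut points; the last is the 90th percentile
        "p90_latency_s": statistics.quantiles(latencies, n=10)[-1],
        "output_tokens_per_s": total_tokens / wall_time,
    }


summary = run_load_test(["explain load testing briefly"] * 20, concurrency=4)
print(summary)
```

Swapping `fake_invoke` for a real invocation would turn this into a very small load generator, though a dedicated tool handles retries, timeouts, and token counting far more carefully.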

A Practical Approach: Step-by-Step Benchmarking on Bedrock

1. **Model Setup**: Before benchmarking, ensure you have your custom model on Amazon Bedrock. If you haven’t imported one yet, the step-by-step instructions for importing a custom model into Amazon Bedrock should get you started.

2. **Invocation and Configuration**: LiteLLM provides a single, standardized interface for model invocation, so the same request format can be used across different models served through the Amazon Bedrock APIs.

3. **Benchmarking Configuration with LLMPerf**: It’s crucial to configure your token benchmark tests accurately. By defining parameters like mean and standard deviation for input and output token counts, organizations can simulate real-world demand effectively.
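The token-count configuration in step 3 can be sketched as follows. This is a minimal illustration of drawing input and output token counts from a normal distribution with a given mean and standard deviation; the function names, the clamp to a minimum of one token, and the example values are assumptions for illustration, not LLMPerf's own code or defaults.

```python
import random


def sample_token_count(mean: float, stddev: float, rng: random.Random) -> int:
    """Draw one token count from a normal distribution, never below 1."""
    return max(1, round(rng.gauss(mean, stddev)))


def build_request_plan(num_requests, mean_in, std_in, mean_out, std_out, seed=0):
    """Return a list of per-request input/output token targets (hypothetical helper)."""
    rng = random.Random(seed)  # fixed seed so a benchmark run can be repeated
    return [
        {
            "input_tokens": sample_token_count(mean_in, std_in, rng),
            "output_tokens": sample_token_count(mean_out, std_out, rng),
        }
        for _ in range(num_requests)
    ]


# Illustrative values only; pick means and deviations that match your own traffic.
plan = build_request_plan(100, mean_in=500, std_in=25, mean_out=150, std_out=10)
print(plan[:3])
```

A wider standard deviation exercises the model with a broader mix of short and long requests, which tends to be closer to real traffic than a fixed prompt length.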

Case Study: Understanding DeepSeek-R1 Models

Consider the example of testing a DeepSeek-R1-Distill-Llama-8B model hosted on Amazon Bedrock. By adjusting the token-count parameters and monitoring the resulting metrics, organizations can produce reports on response times, throughput, and the costs associated with model usage. This kind of benchmarking is particularly relevant for businesses that want to align their AI strategies with measured performance data.

Cost Estimation and Cloud Integration: Adding Value to Your AI Strategy

An additional benefit of benchmarking on Amazon Bedrock is that cost estimation can be built in using Amazon CloudWatch. By aggregating metrics on the number of active model copies that Bedrock needs to serve the test load, firms can estimate what a given level of traffic will cost and plan their AI budgets accordingly.
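As a rough sketch of that calculation, the snippet below multiplies the number of active model copies in each time window by a per-copy price. The billing model (a flat price per copy per minute, charged per fixed window), the window length, the copy counts, and the price are all assumptions for illustration; check current Amazon Bedrock pricing and your own CloudWatch data before relying on any figure.

```python
import math


def estimate_cost(copies_per_window, price_per_copy_minute, window_minutes=5):
    """Estimate cost as copies x minutes x price, summed over all windows.

    copies_per_window: active model copies observed in each window
    price_per_copy_minute: hypothetical price for one copy running one minute
    window_minutes: assumed length of each billing window
    """
    copy_minutes = sum(copies_per_window) * window_minutes
    return copy_minutes * price_per_copy_minute


# Hypothetical 25-minute test: one copy at first, scaling to two under load.
observed_copies = [1, 1, 2, 2, 1]
cost = estimate_cost(observed_copies, price_per_copy_minute=0.05)
print(f"Estimated cost: ${cost:.2f}")
```

Running the same estimate at several concurrency levels shows how quickly cost grows as Bedrock scales out additional copies.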

Conclusion: Realizing the Vision of AI in Business

The AI landscape is dynamic, and organizations need a proactive approach to benchmarking performance against expectations. With resources like Amazon Bedrock, they can deploy models and also verify, through ongoing evaluation and adjustment, that those models are performant and cost-effective. For executives such as CEOs, CMOs, and COOs, understanding these methodologies could open new pathways for innovation and efficiency within their organizations.
