Unlocking the Power of Attention Mechanisms in Digital Transformation

The attention mechanism represents a significant advancement in machine learning. It was first used to enhance recurrent neural networks (RNNs) and is the core building block of transformers, with machine translation (MT) as its original showcase task. Attention makes models more context-aware, helping them capture long-range dependencies that traditional architectures struggle with. As businesses, especially fast-growing companies and their C-suite executives, embrace digital transformation, understanding attention mechanisms is crucial for getting the most out of AI.

Understanding Attention: Key Concepts

The essence of attention mechanisms lies in their ability to prioritize essential information within complex data. In a traditional RNN encoder-decoder, the model compressed the whole input sentence into a single fixed-length vector and predicted each word from that vector and its own previous outputs. Information had to pass through many time steps, and training suffered from the vanishing gradient problem, which made long-range dependencies hard to learn. Attention lets the model look back directly at the most relevant input words at every step, which greatly mitigates this limitation.

For instance, when translating from English to Italian, the model computes a weight for every English word each time it predicts the next Italian word, so the most relevant source words dominate instead of all words contributing equally. This targeted approach improves accuracy in translation and other sequential tasks, which is why attention has become a standard component of modern sequence models.
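To make the translation example concrete, here is a minimal sketch in plain Python. It assumes dot-product scoring for brevity (the original RNN attention of Bahdanau et al. used a small feed-forward "additive" scorer instead), and every vector, word, and function name below (`softmax`, `dot`, `attend`) is hypothetical and chosen for illustration only.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attend(query, encoder_states):
    """Score each source state against the query, normalize the scores,
    and return (weights, context vector = weighted sum of the states)."""
    weights = softmax([dot(query, h) for h in encoder_states])
    dim = len(encoder_states[0])
    context = [sum(w * h[i] for w, h in zip(weights, encoder_states)) for i in range(dim)]
    return weights, context

# Hypothetical 3-d encoder states for the English source "the black cat".
source_words = ["the", "black", "cat"]
encoder_states = [
    [0.1, 0.0, 0.2],
    [0.0, 1.0, 0.1],
    [0.9, 0.1, 0.8],
]
# Hypothetical decoder state just before it emits the Italian word "gatto".
decoder_query = [1.0, 0.0, 1.0]

weights, context = attend(decoder_query, encoder_states)
for word, w in zip(source_words, weights):
    print(f"{word:>6}: {w:.2f}")
```

With these made-up numbers, "cat" receives the largest weight (about 0.69), so the context vector the decoder uses is dominated by that word's state, while "the" and "black" still contribute a little.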

Transformers and Self-Attention: A Game Changer

Although the attention mechanism is best known for its role in the transformer architecture, it was originally introduced for RNN-based encoder-decoder models. The defining feature of transformers is self-attention, which lets the model evaluate relationships among elements within the same input sequence. The result is a matrix of attention weights that offers a glimpse into how the tokens relate to one another. As businesses accumulate vast amounts of data, harnessing self-attention can enhance insights and decision-making.
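The attention-weight matrix described above can be sketched in a few lines. This is a deliberately simplified version, assuming each token's embedding is used directly as both query and key (real transformers first apply learned projections and a scaling factor); the tokens, embeddings, and the `self_attention_weights` helper are hypothetical.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention_weights(vectors):
    """Return an n x n matrix: row i holds how strongly token i attends
    to every token j in the same sequence (including itself).
    Simplification: no Q/K projections and no scaling."""
    matrix = []
    for q in vectors:
        scores = [sum(a * b for a, b in zip(q, k)) for k in vectors]
        matrix.append(softmax(scores))
    return matrix

# Hypothetical 2-d embeddings for a four-token sentence.
tokens = ["sales", "rose", "sharply", "today"]
embeddings = [[1.0, 0.2], [0.8, 0.4], [0.1, 1.0], [0.0, 0.3]]

weights = self_attention_weights(embeddings)
print("".join(f"{t:>9}" for t in [""] + tokens))
for token, row in zip(tokens, weights):
    print(f"{token:>9}" + "".join(f"{w:9.2f}" for w in row))
```

Each row of the printed table sums to 1, and reading across a row shows which other tokens a given token draws on; this is the "glimpse" into token relationships that attention visualizations are built from.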

The Mechanics Behind Attention Weights

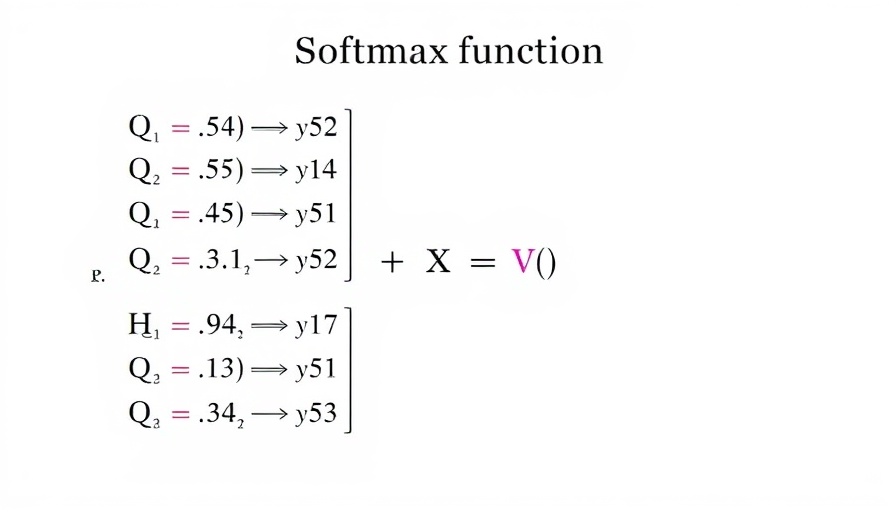

To implement attention, we first tokenize the input sentence and map each token to a numerical vector (an embedding). From each embedding, the model derives three vectors by multiplying it with learned weight matrices: a query (Q), a key (K), and a value (V). These vectors let the model determine how significant each token is relative to the others.
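The projection step can be illustrated with plain matrix multiplication. This is a minimal sketch under stated assumptions: the embeddings `X` and the matrices `W_Q`, `W_K`, and `W_V` are hypothetical hand-picked numbers, whereas in a trained model the three weight matrices are learned parameters.

```python
def matmul(a, b):
    """Multiply an (n x d) matrix by a (d x m) matrix, both stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

# Hypothetical embeddings: 3 tokens, model dimension 4.
X = [
    [1.0, 0.0, 1.0, 0.0],
    [0.0, 2.0, 0.0, 2.0],
    [1.0, 1.0, 1.0, 1.0],
]

# Hypothetical 4 x 2 projection matrices (learned during training in a real model).
W_Q = [[1, 0], [0, 1], [1, 0], [0, 1]]
W_K = [[0, 1], [1, 0], [0, 1], [1, 0]]
W_V = [[1, 1], [0, 0], [0, 1], [1, 0]]

Q = matmul(X, W_Q)  # one query vector per token
K = matmul(X, W_K)  # one key vector per token
V = matmul(X, W_V)  # one value vector per token
print("Q =", Q)
print("K =", K)
print("V =", V)
```

The same embedding row produces three different vectors because each weight matrix extracts a different view of the token: what it is looking for (query), what it offers for matching (key), and what it contributes to the output (value).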

In operational terms, the model takes the dot product of each query vector with every key vector and divides the result by the square root of the key dimension d_k, producing scores that indicate how relevant each token is to every other token. A softmax function then normalizes these scores into attention weights that sum to 1, and the output for each token is the weighted sum of the value vectors. For organizations adopting AI solutions, understanding these mechanics helps explain why attention-based models perform so well on language and other sequential data.
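The steps above correspond to the scaled dot-product attention formula softmax(QK^T / sqrt(d_k)) V. The following is a minimal pure-Python sketch of that formula; the function name `scaled_dot_product_attention` and the example matrices are illustrative assumptions, and production libraries implement the same computation with batched tensor operations.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """Compute softmax(Q K^T / sqrt(d_k)) V one query row at a time.
    Returns (outputs, weights)."""
    d_k = len(K[0])
    weights, outputs = [], []
    for q in Q:
        # Relevance of every key to this query, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)  # attention weights for this query, summing to 1
        weights.append(w)
        # Output = weighted sum of the value vectors.
        outputs.append([sum(wj * v[i] for wj, v in zip(w, V)) for i in range(len(V[0]))])
    return outputs, weights

# Hypothetical example: 2 queries attending over 3 key/value pairs.
Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
outputs, weights = scaled_dot_product_attention(Q, K, V)
print(weights)
print(outputs)
```

Dividing by sqrt(d_k) keeps the dot products from growing with the vector size; without it, large scores would push the softmax toward a near one-hot distribution with very small gradients.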

Future Insights: Attention in an AI-Driven Business Environment

The future landscape of AI is likely to be shaped by further advances in attention mechanisms. As companies seek to enhance productivity and innovation, the ability of AI models to focus on what matters will be pivotal. Organizations across sectors such as technology, healthcare, and finance are already adopting more sophisticated models built on attention. This trend reflects a growing recognition of the value attention provides in pinpointing crucial information within large datasets.

In conclusion, for fast-growing companies and C-suite executives, understanding and adopting attention mechanisms is not merely optional; it's essential for thriving in today’s competitive digital landscape. Investing in AI solutions that capitalize on these advancements could be the key to unlocking substantial business growth and operational efficiency. Stay ahead by exploring attention-driven methodologies that enhance your capabilities and drive innovation.
