Understanding AI Hallucinations

In the rapidly evolving landscape of generative AI, one of the most pressing challenges organizations face is AI hallucination. The term refers to instances where an AI model generates information that seems plausible but is inaccurate or entirely fabricated. Hallucinations can spread misinformation and have severe consequences, especially in critical industries such as healthcare and finance, and in internal decision-making processes. As AI becomes more deeply integrated into corporate operations, detecting and minimizing hallucinations becomes paramount for CEOs, CMOs, and COOs who want to use AI responsibly.

New Solutions to Tackle Hallucinations

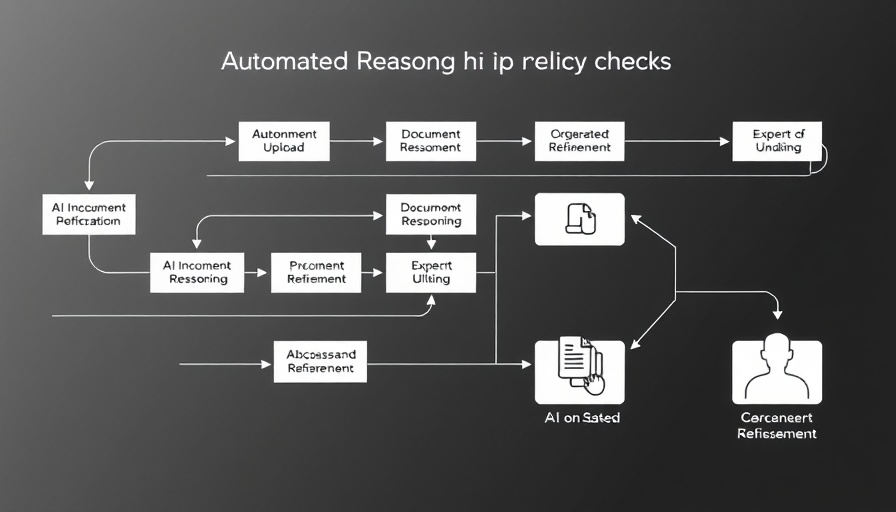

Amazon recently introduced a feature aimed at this problem on its Amazon Bedrock platform: automated reasoning checks, designed to improve the reliability of generative AI outputs. Organizations can integrate these checks into their applications to verify information generated by AI models before it is published, improving the accuracy and trustworthiness of the resulting content.
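
For technical teams, the basic "verify before publishing" idea can be illustrated with a toy example. This sketch is not the Amazon Bedrock API and does not reflect how AWS implements automated reasoning; the names `Claim`, `POLICY`, `verify`, and `publish_if_valid` and the refund-policy rules are hypothetical. It uses simple rule checks as a stand-in for formal logical reasoning, and it assumes the factual claims have already been extracted from the model's answer.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    """A structured fact extracted from a model's answer (extraction is out of scope here)."""
    subject: str
    attribute: str
    value: object


# Hypothetical company policy: each attribute maps to a rule the claimed value must satisfy.
POLICY = {
    "refund_window_days": lambda v: isinstance(v, (int, float)) and 0 < v <= 30,
    "warranty_years": lambda v: isinstance(v, (int, float)) and 0 < v <= 2,
}


def verify(claims):
    """Check every claim against POLICY; return (is_valid, findings)."""
    findings = []
    for claim in claims:
        rule = POLICY.get(claim.attribute)
        if rule is None:
            # No rule covers this claim, so it cannot be confirmed.
            findings.append((claim, "no matching rule"))
        elif not rule(claim.value):
            findings.append((claim, "violates policy"))
    return (not findings, findings)


def publish_if_valid(answer, claims):
    """Release the answer only if all claims pass; otherwise hold it (return None) for review."""
    ok, _ = verify(claims)
    return answer if ok else None


if __name__ == "__main__":
    answer = "You can request a refund within 90 days."
    claims = [Claim("refund", "refund_window_days", 90)]
    print(verify(claims))
    print(publish_if_valid(answer, claims))
```

Treating claims with no matching rule as failures is a deliberately conservative choice: anything the policy cannot confirm is held back rather than published.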

Why This Matters Now

As industries increasingly depend on AI for analysis and content creation, the need for accurate, factual outputs has never been greater. Hallucinations not only undermine trust but can also skew decision-making at the executive level. Automated reasoning checks arrive at a critical moment, as organizations race to adopt AI tools not just for efficiency but to foster innovation and maintain a competitive advantage.

Real-World Applications and Impact

Consider an AI system that generates misleading medical advice: it could endanger lives or cause costly errors for healthcare providers. Similarly, in finance, a hallucination could trigger misguided investment decisions with real financial losses. Amazon Bedrock's automated reasoning checks aim to reduce these risks by acting as a verification layer on top of generative models. This proactive approach aligns with the growing emphasis on ethical AI use, as stakeholders increasingly demand transparency and accountability.

Future Predictions for AI Safety

As AI technology advances, mechanisms like automated reasoning checks are likely to become standard practice in sectors that rely on AI. Adoption of such safety measures is expected to accelerate as organizations invest in frameworks that ensure AI-generated content meets strict accuracy standards. This trend could also prompt regulation that mandates such practices, which would give end users and stakeholders greater confidence in AI systems.

Take Action for AI Integration

For organizations aiming to harness generative AI, now is the time to strengthen your strategy by integrating verification tools such as Amazon Bedrock's automated reasoning checks. This step helps guard against misinformation and increases the credibility of your AI solutions, so they can support rather than undermine your organizational transformation.

In conclusion, as AI continues to evolve, leaders must remain vigilant and proactive in adopting tools that not only boost productivity but also uphold accuracy and integrity. Embracing solutions like Amazon Bedrock can place your organization at the forefront of responsible AI usage, setting the stage for sustainable growth and innovation.
