Understanding the Importance of Accurate AI in Healthcare

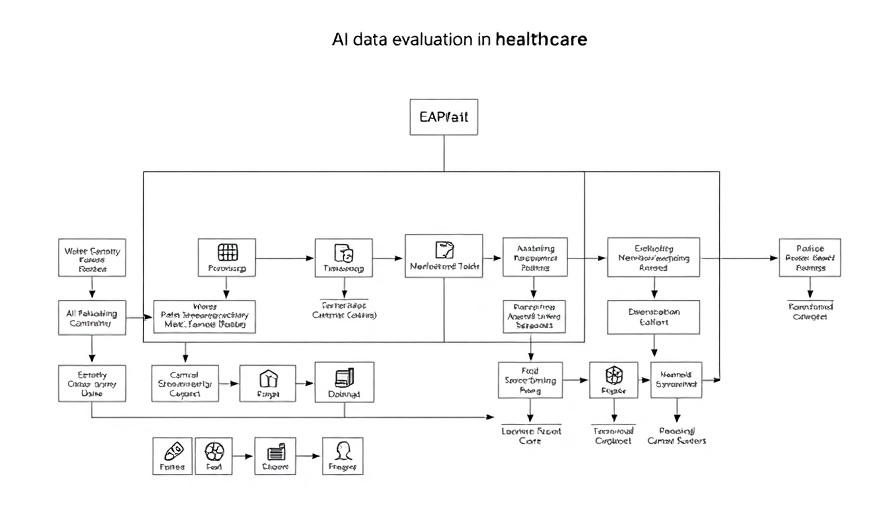

As healthcare adopts artificial intelligence (AI), the accuracy of generative AI applications becomes critical. Recent work on evaluating these systems with the LLM-as-a-judge approach on AWS is a meaningful step toward ensuring that AI-generated medical content is clinically accurate and reliable. The framework developed by AWS goes beyond conventional performance metrics by using large language models (LLMs) themselves as evaluators, which matters in high-stakes environments like healthcare.

What Is the LLM-as-a-Judge Framework?

This evaluation framework assesses generative AI applications in healthcare using models hosted on Amazon Bedrock. By linking the retrieval of medical knowledge with established clinical standards, the LLM-as-a-judge framework makes generated medical reports more trustworthy and more actionable. It scores outputs on five dimensions (correctness, completeness, helpfulness, logical coherence, and faithfulness), so that an output is judged not only against technical standards but also against the nuanced needs of clinical practice.
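To make the idea concrete, here is a minimal sketch of how a judge-style evaluation over those five dimensions could be wired up. It is not the AWS implementation: the prompt wording, the 0.0 to 1.0 scale, the JSON reply format, and the names `build_judge_prompt`, `parse_scores`, `evaluate`, and `stub_judge` are all assumptions made for illustration. The `judge` argument is a placeholder for whatever function sends a prompt to a hosted model and returns its text reply.

```python
import json
from typing import Callable, Dict

# The five dimensions named in the article.
METRICS = [
    "correctness",
    "completeness",
    "helpfulness",
    "logical_coherence",
    "faithfulness",
]


def build_judge_prompt(reference: str, candidate: str) -> str:
    """Assemble a grading prompt (illustrative wording, not AWS's)."""
    metric_list = ", ".join(METRICS)
    return (
        "You are grading an AI-generated medical report against a reference.\n"
        f"Score each of: {metric_list} from 0.0 to 1.0.\n"
        "Reply with a JSON object mapping each metric name to its score.\n\n"
        f"Reference:\n{reference}\n\n"
        f"Candidate:\n{candidate}\n"
    )


def parse_scores(raw: str) -> Dict[str, float]:
    """Parse the judge's JSON reply and validate every metric."""
    data = json.loads(raw)
    scores: Dict[str, float] = {}
    for metric in METRICS:
        value = float(data[metric])  # KeyError if the judge skipped a metric
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{metric} score out of range: {value}")
        scores[metric] = value
    return scores


def evaluate(
    reference: str, candidate: str, judge: Callable[[str], str]
) -> Dict[str, float]:
    """Run one candidate through the judge and return per-metric scores."""
    return parse_scores(judge(build_judge_prompt(reference, candidate)))


def stub_judge(prompt: str) -> str:
    """Stand-in for a real model call; returns fixed placeholder scores."""
    return json.dumps({metric: 0.5 for metric in METRICS})


if __name__ == "__main__":
    print(evaluate("reference report text", "generated report text", stub_judge))
```

Keeping the model call behind a plain callable means the scoring logic can be tested with a stub, and the judge model can be swapped without touching the parsing or validation code.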

The Role of Retrieval-Augmented Generation

One of the standout features of this evaluation methodology is its use of Retrieval-Augmented Generation (RAG). By combining information retrieved from knowledge bases with generative capabilities, AI systems can ground their output in relevant medical context, which reduces hallucinations and improves overall accuracy. This technique is particularly valuable in healthcare, where the cost of an inaccurate statement can be far higher than in other industries.
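The retrieve-then-generate pattern can be sketched in a few lines. This is a toy illustration under stated assumptions: retrieval here is simple word overlap over an in-memory list, whereas a production system would typically use embeddings and a managed knowledge base. The passages, the prompt wording, and the names `retrieve` and `build_grounded_prompt` are hypothetical.

```python
import re
from typing import List, Set

# Hypothetical placeholder passages standing in for a medical knowledge base.
KNOWLEDGE_BASE = [
    "Chest imaging reports describe findings before impressions.",
    "Medication lists include dose and frequency.",
    "Discharge summaries record follow-up appointments.",
]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, passages: List[str], k: int = 2) -> List[str]:
    """Return up to k passages sharing the most tokens with the query."""
    query_tokens = tokenize(query)
    scored = [
        (len(query_tokens & tokenize(passage)), index, passage)
        for index, passage in enumerate(passages)
    ]
    # Drop passages with no overlap; break ties by original order.
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [passage for _, _, passage in scored[:k]]


def build_grounded_prompt(query: str, passages: List[str], k: int = 2) -> str:
    """Prepend retrieved context so the generator can cite it."""
    context = retrieve(query, passages, k)
    context_block = "\n".join(f"- {p}" for p in context)
    if not context_block:
        context_block = "- (no relevant passage found)"
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {query}\n"
    )


if __name__ == "__main__":
    print(build_grounded_prompt(
        "What do chest imaging reports describe?", KNOWLEDGE_BASE
    ))
```

Instructing the generator to answer only from the retrieved context is what ties the output back to a checkable source, and that is also what a faithfulness score can later be measured against.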

Comparing Performance Metrics

Results from evaluations on the dev1 and dev2 datasets show how effective the framework is. Reported scores such as 0.98 for correctness and 0.99 for logical coherence indicate that these generative models can produce reliable content. As AI systems are increasingly integrated into diagnosis and treatment protocols, rigorous benchmarks of this kind will become essential for maintaining high standards of patient care.
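Dataset-level figures like these are usually averages of per-sample judge scores. The sketch below shows one way to compute them; the arithmetic mean, the three-decimal rounding, and the function name `aggregate` are assumptions, and the input values are made-up placeholders rather than results from dev1 or dev2.

```python
from statistics import mean
from typing import Dict, List


def aggregate(per_sample: List[Dict[str, float]]) -> Dict[str, float]:
    """Average each metric across all samples in a dataset."""
    if not per_sample:
        raise ValueError("need at least one scored sample")
    metrics = per_sample[0].keys()
    return {
        metric: round(mean(sample[metric] for sample in per_sample), 3)
        for metric in metrics
    }


if __name__ == "__main__":
    # Placeholder scores for two samples, for illustration only.
    placeholder_scores = [
        {"correctness": 1.0, "logical_coherence": 1.0},
        {"correctness": 0.5, "logical_coherence": 1.0},
    ]
    print(aggregate(placeholder_scores))
```

Reporting each metric separately, rather than collapsing everything into one number, keeps a weak dimension from being hidden behind strong ones.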

Future Implications for Generative AI in Healthcare

Better methods for evaluating generative AI applications raise important questions about the future of healthcare technology. As these tools become part of clinical practice, they will need continuous assessment to keep pace with evolving medical standards. That need is also an opportunity: organizations can invest in systematic evaluations that track safety, accuracy, and efficiency and that adapt as medical knowledge and practice change.

Why This Evaluation Framework Matters to CEOs, CMOs, and COOs

For leaders in the healthcare sector, adopting these AI tools is not merely a technology upgrade but a strategic initiative that affects patient care and organizational effectiveness. Pairing AI solutions with robust evaluation positions healthcare organizations to achieve better patient outcomes and greater operational efficiency.

In conclusion, the introduction of the LLM-as-a-judge evaluation framework and RAG techniques is a pivotal step forward in ensuring that healthcare generative AI applications meet the stringent demands of clinical practice. As the reliance on automated insights grows, ensuring their reliability through rigorous evaluations will be fundamental to navigating the complexities of modern healthcare.

In this era of digital transformation, it’s time to equip your healthcare organization with proven AI strategies that enhance accuracy and efficiency. Explore AWS solutions today and ensure your operational frameworks are equipped for the future.
