The Future of Explainable AI in Financial Services

As economic landscapes shift, financial services institutions (FSIs) increasingly rely on generative AI to streamline operations and support decision-making. Deploying AI brings challenges, however, particularly in making outcomes transparent and verifiable. AWS has responded by launching Automated Reasoning checks for Amazon Bedrock Guardrails, which are intended to give institutions a verifiable basis for demonstrating compliance.

What Are Automated Reasoning Checks?

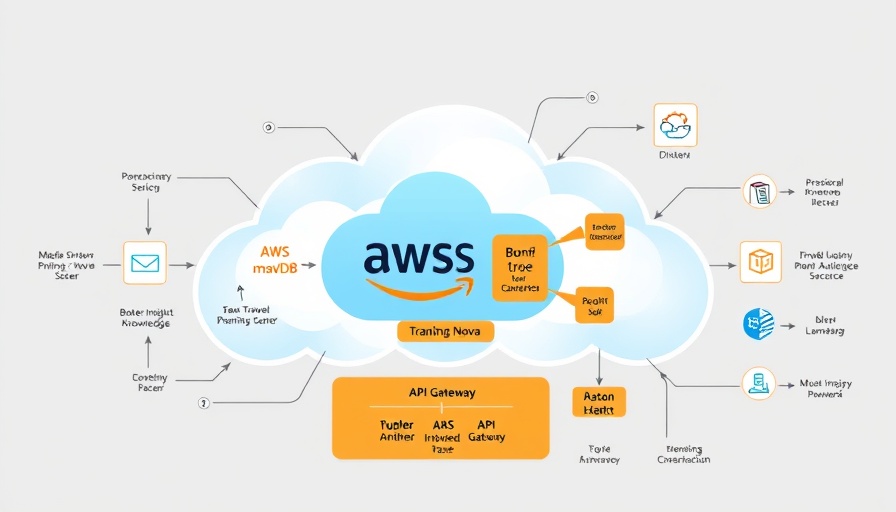

Automated Reasoning checks use formal logic and mathematical principles to verify what generative AI systems produce. Unlike traditional machine learning methods, which produce probabilistic outcomes, these checks offer deterministic results: the same inputs and outputs evaluated against the same rules yield the same verdict every time. Because inputs and outputs are rigorously evaluated against a set of predetermined rules, FSIs can adopt AI solutions with more confidence in settings that require policy compliance and regulatory transparency.

Why Explainability Matters in Financial Services

For the financial sector, a lack of explainability in AI-driven outcomes can result in significant regulatory risks and operational inefficiencies. Institutions like NASDAQ and Bridgewater have begun using foundation models (FMs), the large pretrained models behind generative AI; nevertheless, they face scrutiny regarding the transparency of these models. Automated Reasoning checks allow organizations to demonstrate how decisions are made, thus fostering trust in their AI tools.

Real-World Applications: Validating Complex Processes

Several scenarios within FSIs highlight the versatility of Automated Reasoning. Consider the underwriting process in insurance, which often involves complex regulations regarding acceptable risk levels. Automated Reasoning checks can streamline the validation of these processes by breaking down rules into logical components and providing deterministic outputs. This facilitates more efficient decision-making, aligning closely with regulatory frameworks.
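To make the idea of "breaking down rules into logical components" concrete, here is a minimal Python sketch. It is not how Automated Reasoning checks are implemented, and it does not call any AWS API; the `Application` fields, rule names, and thresholds are all hypothetical, chosen only for illustration. It shows the general shape: each underwriting rule is a separate logical predicate, and the verdict is a deterministic function of the application.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Application:
    # Hypothetical underwriting inputs; real policies would have many more.
    age: int
    credit_score: int
    coverage_amount: int


@dataclass(frozen=True)
class Rule:
    name: str
    holds: Callable[[Application], bool]


# Each rule is one logical component. Thresholds are illustrative assumptions.
RULES: List[Rule] = [
    Rule("applicant_is_adult", lambda a: a.age >= 18),
    Rule("minimum_credit_score", lambda a: a.credit_score >= 600),
    # Implication "large coverage -> strong credit", written as (not P) or Q.
    Rule(
        "large_coverage_needs_strong_credit",
        lambda a: a.coverage_amount <= 500_000 or a.credit_score >= 700,
    ),
]


def evaluate(app: Application) -> Tuple[bool, List[str]]:
    """Return (approved, names of violated rules) for one application."""
    violated = [rule.name for rule in RULES if not rule.holds(app)]
    return (not violated, violated)


if __name__ == "__main__":
    print(evaluate(Application(age=35, credit_score=650, coverage_amount=750_000)))
```

Because the result lists which named rules failed, the same structure doubles as a simple audit record: a reviewer can see not just that an application was rejected but which rule caused the rejection.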

Mitigating the Risk of Hallucinations in AI

One of the critical concerns with generative AI is the risk of model hallucination, where an AI generates inaccurate or unreliable information. This poses a significant challenge to organizations operating in highly regulated industries. Automated Reasoning checks address this directly by validating AI outputs against the encoded rules and flagging those that do not align with compliance requirements. This assurance is paramount for maintaining decision integrity in financial services.
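As a rough illustration of validating an output against known rules, the sketch below compares claims made in a model's answer with a small table of policy facts. Everything here is an assumption for the sake of the example: the `POLICY_FACTS` values, the claim keys, and the premise that claims have already been extracted from the model's text into key-value pairs. A real Automated Reasoning check works on formal logic rather than a lookup table, so treat this only as a simplified picture of the outcome categories one might want.

```python
from typing import Dict, List, Tuple

# Hypothetical policy facts that an institution has encoded ahead of time.
POLICY_FACTS: Dict[str, object] = {
    "max_loan_to_value": 0.8,
    "min_down_payment": 0.2,
    "requires_income_verification": True,
}


def check_claims(claims: Dict[str, object]) -> List[Tuple[str, str]]:
    """Label each claim extracted from a model answer.

    Labels:
      consistent          the claim matches the encoded policy fact
      contradicts_policy  the claim conflicts with the policy (possible hallucination)
      unverifiable        the policy says nothing about this claim
    """
    findings: List[Tuple[str, str]] = []
    for key in sorted(claims):  # sorted for a stable, repeatable report
        if key not in POLICY_FACTS:
            findings.append((key, "unverifiable"))
        elif claims[key] == POLICY_FACTS[key]:
            findings.append((key, "consistent"))
        else:
            findings.append((key, "contradicts_policy"))
    return findings


if __name__ == "__main__":
    answer_claims = {"max_loan_to_value": 0.9, "min_down_payment": 0.2}
    for claim, label in check_claims(answer_claims):
        print(claim, label)
```

Keeping "unverifiable" separate from "contradicts_policy" matters in practice: an answer that goes beyond the policy is a different risk from one that conflicts with it, and a reviewer may want to handle the two differently.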

A New Era for Auditable AI Workflows

The integration of Automated Reasoning checks within financial services workflows marks a pivotal advancement in AI governance. With mathematically grounded verification and clear audit trails, FSIs can navigate complex regulatory environments with more confidence and demonstrate adherence to evolving standards.

Embracing these technologies is no longer optional; rather, it is a necessity for modern financial institutions aiming for sustainable growth in a highly competitive landscape. Those ready to adopt these innovative processes will not only enhance operational excellence but also improve stakeholder trust and satisfaction.
