Revolutionizing Video Processing: ByteDance's AI Innovations

ByteDance is making notable strides in video processing by applying advanced AI. The company, best known for TikTok, uses multimodal large language models (LLMs) to process billions of videos every day, improving both user engagement and content moderation.

Cost Efficiency Meets Performance

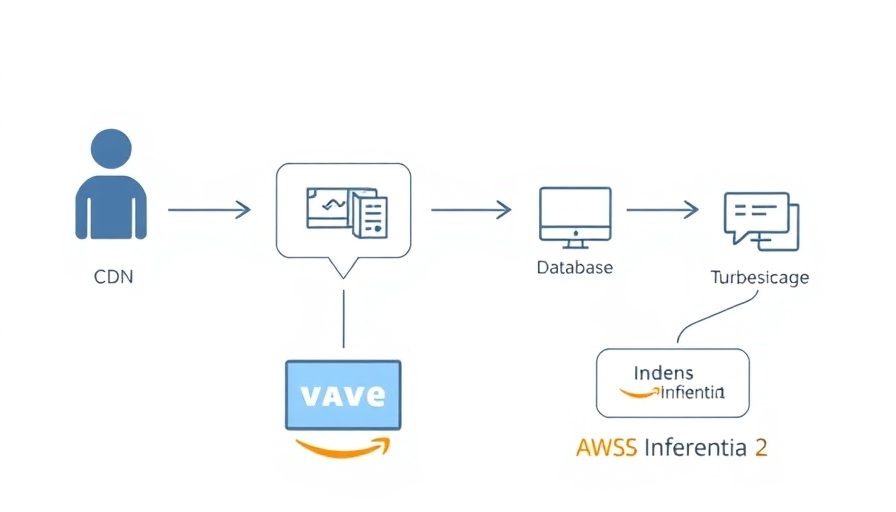

ByteDance's collaboration with Amazon Web Services (AWS) has substantially improved its operational efficiency. By deploying its models on AWS Inferentia2 chips, ByteDance cut inference costs by up to 50% without compromising performance. The result shows how purpose-built inference hardware can make AI-based video understanding affordable at very large scale.
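
The short calculation below applies the "up to 50%" figure to a workload, to make it concrete. The volume (one billion videos per day) and the unit price (an assumed $0.02 per 1,000 videos) are hypothetical and are not ByteDance or AWS figures. Only the 50% reduction comes from the article, and it is a best case ("up to").

```python
def daily_inference_cost(videos_per_day: int, cost_per_1k_videos: float) -> float:
    """Daily inference spend in dollars for a given volume and unit price."""
    return videos_per_day / 1_000 * cost_per_1k_videos


def cost_after_reduction(baseline: float, reduction: float) -> float:
    """Cost remaining after a fractional reduction (0.5 means 50% cheaper)."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    return baseline * (1.0 - reduction)


# Hypothetical inputs: illustrative only, not published figures.
VIDEOS_PER_DAY = 1_000_000_000
COST_PER_1K_VIDEOS = 0.02  # dollars, assumed

baseline = daily_inference_cost(VIDEOS_PER_DAY, COST_PER_1K_VIDEOS)
optimized = cost_after_reduction(baseline, 0.50)
print(f"baseline ${baseline:,.0f}/day, optimized ${optimized:,.0f}/day, "
      f"saving ${(baseline - optimized) * 365:,.0f}/year")
```

With these assumed inputs, the baseline is $20,000 per day and the annual saving is $3.65 million. The absolute numbers mean little; the point is that a 50% cut scales linearly with volume.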

Advanced Multimodal LLMs: Harnessing Multiple Data Types

At the heart of ByteDance's approach are multimodal LLMs. These models analyze video, audio, and text together, giving a more complete understanding of each piece of content. This matters both for improving the user experience and for enforcing community guidelines. Integrating these inputs supports more nuanced analysis than traditional pipelines, which often process each data type in isolation.
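
A common way to let one LLM consume several modalities works in three steps. First, each modality is encoded separately. Next, the resulting embeddings are projected into the LLM's embedding space. Finally, they are concatenated into a single input sequence, with a marker before each modality. The article does not describe ByteDance's exact architecture, so the sketch below shows only this general pattern. It uses hypothetical names (`ModalityInput`, `build_sequence`) and toy dimensions. Real systems use learned encoders and projection layers rather than hand-written matrices.

```python
from dataclasses import dataclass
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]  # a weight matrix stored as a list of rows


@dataclass
class ModalityInput:
    name: str                 # "video", "audio", or "text" in this sketch
    embeddings: List[Vector]  # one encoder output per frame, audio segment, or token


def project(vec: Vector, weight: Matrix) -> Vector:
    """Map an encoder output into the shared model dimension: weight @ vec."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weight]


def build_sequence(
    inputs: List[ModalityInput],
    projections: Dict[str, Matrix],
    markers: Dict[str, Vector],
) -> List[Vector]:
    """Emit a modality marker, then that modality's projected embeddings."""
    sequence: List[Vector] = []
    for item in inputs:
        sequence.append(markers[item.name])  # tells the LLM which modality follows
        weight = projections[item.name]
        sequence.extend(project(e, weight) for e in item.embeddings)
    return sequence


if __name__ == "__main__":
    # Toy setup: shared model dimension of 2; each modality has its own input size.
    projections = {
        "video": [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]],  # 3 -> 2
        "audio": [[0.5], [0.5]],                      # 1 -> 2
        "text": [[1.0, 0.0], [0.0, 1.0]],             # 2 -> 2
    }
    markers = {"video": [9.0, 0.0], "audio": [0.0, 9.0], "text": [9.0, 9.0]}
    inputs = [
        ModalityInput("video", [[1.0, 0.0, 0.0], [0.0, 2.0, 0.0]]),  # two frames
        ModalityInput("audio", [[4.0]]),                              # one segment
        ModalityInput("text", [[0.1, 0.2], [0.3, 0.4]]),              # two tokens
    ]
    for position, vec in enumerate(build_sequence(inputs, projections, markers)):
        print(position, vec)
```

Once every modality lives in the same vector space, the LLM can attend across frames, audio, and text in a single pass. Pipelines that silo each data type cannot do this.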

The Future of Content Understanding and Community Safety

Looking ahead, ByteDance aims to build a single unified multimodal LLM that can process all content types. If it succeeds, the project could change how video and content analysis is done. It would make understanding and moderating very large volumes of digital content more efficient and more effective.

Strategic Optimization Techniques for Enhanced Performance

ByteDance has applied several optimizations to its video understanding systems, ranging from tensor parallelism to compiler-level optimizations, to maximize throughput and efficiency. Static batching and quantization further increased the system's capacity to handle large data volumes. Together, these choices highlight how closely hardware and software must be co-designed in AI applications.
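
Tensor parallelism splits a single layer's weight matrix across several devices, so each device holds and multiplies only a slice. This lets a model that is too large for one accelerator core run across several. The sketch below simulates column-wise splitting of a linear layer inside one Python process, and the function names are hypothetical. In a real deployment, the shards would live on separate cores, and the serving framework would handle the final concatenation as a collective communication step (an all-gather).

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b, with matrices stored as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def split_columns(w: Matrix, n_devices: int) -> List[Matrix]:
    """Split w (d_in x d_out) into n_devices equal column shards."""
    d_out = len(w[0])
    if d_out % n_devices:
        raise ValueError("d_out must be divisible by the number of devices")
    size = d_out // n_devices
    return [[row[i * size:(i + 1) * size] for row in w] for i in range(n_devices)]


def column_parallel_linear(x: Matrix, w: Matrix, n_devices: int) -> Matrix:
    """Compute x @ w with w sharded by columns across simulated devices."""
    shards = split_columns(w, n_devices)
    # Each device multiplies the full input by its own shard; on real hardware
    # these products run concurrently on different cores.
    partials = [matmul(x, shard) for shard in shards]
    # All-gather: concatenate every row's partial outputs along the feature axis.
    return [[v for p in partials for v in p[r]] for r in range(len(x))]


if __name__ == "__main__":
    x = [[1.0, 2.0]]                        # one input row, d_in = 2
    w = [[1.0, 2.0, 3.0, 4.0],
         [5.0, 6.0, 7.0, 8.0]]              # d_in = 2, d_out = 4
    print(matmul(x, w))                     # single-device reference
    print(column_parallel_linear(x, w, 2))  # same result from two shards
```

Splitting by columns needs only a concatenation at the end. Splitting by rows instead requires summing the partial results (an all-reduce). A common arrangement, popularized by Megatron-LM, pairs a column-split layer with a row-split one, so each MLP block needs only a single all-reduce.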

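Static batching and quantization can be illustrated together. Ahead-of-time compiled accelerators generally prefer fixed input shapes. Static batching therefore groups requests into batches of one fixed size and pads the last batch. INT8 quantization stores weights as 8-bit integers plus a scale factor, which reduces memory traffic at a small cost in accuracy.

The sketch below uses symmetric per-tensor quantization and a single dot product as a stand-in for a model layer. The function names are hypothetical. The article does not say which quantization scheme or batch size ByteDance used.

```python
from typing import List, Tuple

Row = List[float]


def make_static_batches(requests: List[Row], batch_size: int) -> List[Tuple[List[Row], int]]:
    """Group requests into fixed-size batches, zero-padding the last one.

    Returns (batch, n_real) pairs so padded rows can be dropped after inference.
    """
    if not requests:
        return []
    width = len(requests[0])
    batches = []
    for start in range(0, len(requests), batch_size):
        chunk = requests[start:start + batch_size]
        n_real = len(chunk)
        chunk = chunk + [[0.0] * width for _ in range(batch_size - n_real)]
        batches.append((chunk, n_real))
    return batches


def quantize_int8(weights: Row) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantization: w is approximately q * scale."""
    if not weights:
        raise ValueError("weights must be non-empty")
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> Row:
    """Recover approximate float weights from INT8 values."""
    return [v * scale for v in q]


def quantized_dot(x: Row, q: List[int], scale: float) -> float:
    """Dot product against INT8 weights, rescaled once at the end."""
    return scale * sum(xi * qi for xi, qi in zip(x, q))


def run_static(requests: List[Row], q: List[int], scale: float, batch_size: int) -> Row:
    """Run every request through fixed-shape batches and return real outputs only."""
    outputs: Row = []
    for batch, n_real in make_static_batches(requests, batch_size):
        # Every batch has the same shape, so one compiled graph could serve them all.
        results = [quantized_dot(row, q, scale) for row in batch]
        outputs.extend(results[:n_real])  # discard padded rows
    return outputs


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.02, 0.0]
    q, scale = quantize_int8(weights)
    requests = [[1.0, 2.0, 3.0, 4.0], [0.5, 0.5, 0.5, 0.5], [2.0, 0.0, 1.0, 0.0]]
    print(q, scale)
    print(run_static(requests, q, scale, batch_size=2))
```

Padding wastes some compute when the final batch is only partly full. That is the price of a shape the compiler can optimize once. The rounding error per weight is at most half the scale factor.
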
The Broader Impact on AI and Media Regulation

As AI technologies mature and become more accessible, they carry significant implications for media companies and regulators. Applying them at scale raises questions about data privacy, ethical AI use, and content moderation standards. ByteDance's approach offers a case study in how AI might balance innovation with the need for safe and responsible content curation.

Conclusion: A Vision for Tomorrow

As ByteDance continues to refine its AI approaches, its work could set new benchmarks for video processing and understanding. Combining multimodal large language models with purpose-built hardware points to a future in which AI both improves user experiences and helps keep platforms safe and inclusive. This is more than a technological evolution: it reflects a commitment to empowering creativity while managing the complexities of content at scale in today’s digital world.
