Revolutionizing AI Development with Amazon SageMaker

Foundation models (FMs) sit at the forefront of Artificial Intelligence, and they place heavy demands on compute. Pre-training these models often requires thousands of accelerators running continuously for extended periods, sometimes months. Meeting that demand calls for distributed training clusters, which parallelize workloads across hundreds to thousands of accelerators using frameworks such as PyTorch and integrate with AWS infrastructure.

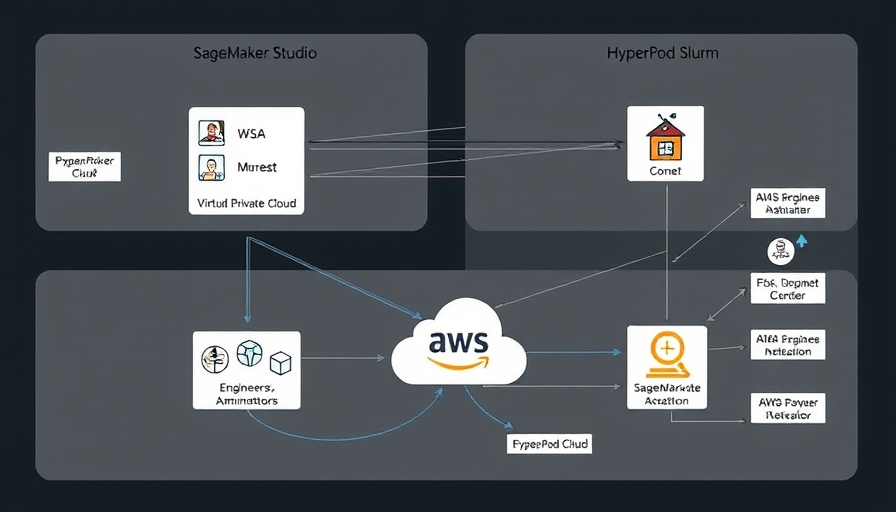

Exploring SageMaker HyperPod: A Game Changer

Amazon SageMaker HyperPod is built for large-scale foundation model training. It establishes resilient clusters designed specifically for machine learning workloads, so developers can train frontier models without frequent interruptions. To address infrastructure reliability, SageMaker HyperPod runs health monitoring agents on each instance. If a hardware failure is detected, the system automatically repairs or replaces the faulty instance, and training resumes from the last saved checkpoint. This reduces downtime and limits the lost work to whatever was computed after that checkpoint.
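The checkpoint-and-resume pattern described above can be illustrated with a minimal sketch. This is not HyperPod's implementation or API: the function names (`save_checkpoint`, `load_checkpoint`, `train`), the JSON file format, and the `fail_at` parameter used to simulate a hardware failure are all assumptions made for illustration, and the "training step" is a placeholder calculation.

```python
import json
import os
import tempfile


def save_checkpoint(path, step, state):
    """Write the checkpoint atomically so a crash mid-write cannot corrupt it."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump({"step": step, "state": state}, f)
    # os.replace swaps the new file into place in a single operation.
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return (step, state) from the last checkpoint, or a fresh start if none exists."""
    if not os.path.exists(path):
        return 0, {"loss": None}
    with open(path) as f:
        data = json.load(f)
    return data["step"], data["state"]


def train(path, total_steps, checkpoint_every, fail_at=None):
    """Run a toy training loop that resumes from the last saved checkpoint.

    fail_at is a hypothetical knob: once that many steps have completed,
    the loop raises to simulate a hardware failure.
    """
    step, state = load_checkpoint(path)
    while step < total_steps:
        if fail_at is not None and step == fail_at:
            raise RuntimeError("simulated hardware failure")
        step += 1
        state = {"loss": 1.0 / step}  # placeholder for a real training step
        if step % checkpoint_every == 0:
            save_checkpoint(path, step, state)
    return step, state
```

If a run that checkpoints every 3 steps fails after step 7, calling `train` again with the same path picks up from step 6 instead of step 0. The checkpoint interval is the trade-off to tune: shorter intervals lose less work per failure but spend more time writing state.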

Maximizing Developer Experience with Amazon SageMaker Studio

Robust infrastructure is crucial, but the developer experience matters just as much. Traditional machine learning (ML) workflows often create silos: developers switch back and forth between local Jupyter notebooks and production jobs that are managed separately through SLURM or Kubernetes interfaces, which leads to inefficiencies. Amazon SageMaker Studio addresses this fragmentation by providing a unified, web-based interface. With SageMaker Studio, data scientists and engineers can collaborate more effectively and make more informed decisions about resource allocation during model training.

The Imperative for Resilient AI Structures

Resilience is paramount in distributed training. At every training step, all participating instances must finish their calculations before the job can proceed, which becomes harder to guarantee on massive clusters. A single failed instance can jeopardize the entire training run, and the risk grows with cluster size because more instances mean more chances for one of them to fail. Tooling that anticipates and addresses these failures is therefore critical for organizations that depend on large-scale training.
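A short calculation shows why the risk grows with cluster size. The sketch below assumes that instances fail independently and with the same probability over some time window. Both are simplifying assumptions, and the function name and the failure rate in the usage loop are hypothetical, not measured values.

```python
def prob_run_interrupted(num_instances, per_instance_failure_prob):
    """Probability that at least one instance fails in the window.

    Assumes independent, identically distributed failures: the run survives
    only if every instance survives, which happens with probability
    (1 - p) ** n.
    """
    if num_instances < 0:
        raise ValueError("num_instances must be non-negative")
    if not 0.0 <= per_instance_failure_prob <= 1.0:
        raise ValueError("per_instance_failure_prob must be in [0, 1]")
    return 1.0 - (1.0 - per_instance_failure_prob) ** num_instances


if __name__ == "__main__":
    # Hypothetical per-instance failure probability for one day of training.
    daily_failure_prob = 0.001
    for n in (8, 64, 512, 4096):
        p = prob_run_interrupted(n, daily_failure_prob)
        print(f"{n:5d} instances: P(at least one failure) = {p:.3f}")
```

Because the survival probability shrinks geometrically with the number of instances, a failure rate that is negligible for one machine becomes a routine event for a cluster of thousands.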

Future Directions and Considerations in AI

As models grow more complex, orchestration tools such as SageMaker HyperPod and SageMaker Studio will need to keep evolving. How these systems are refined will shape how organizations incorporate AI into their core operations. Large-scale ML workloads also carry a high Total Cost of Ownership (TCO), so organizations need to explore cost-effective solutions that do not compromise performance.

The shift towards AI-driven decision-making can change how organizations approach challenges and opportunities. By prioritizing resilient infrastructure and collaboration tools, firms can strengthen their AI capabilities and stay at the cutting edge of innovation.
